Mirror of https://github.com/DominikDoom/a1111-sd-webui-tagcomplete.git

synced 2026-01-27 03:29:55 +00:00

Compare commits

76 Commits

| SHA1 |
|---|
| c3f53e1a60 |
| 951e82f055 |
| cd52a0577c |
| 873a15b5f6 |
| 811d4622e9 |
| 296b9456cc |
| 32c7749a5f |
| afe3f23afa |
| 2571a4f70a |
| f8e15307c6 |
| 95ebde9fce |
| c08746a2c0 |
| 39abf1fe3a |
| b047095f80 |
| caf65bfda0 |
| e7fa5aca18 |
| f026e7631c |
| 15538336c9 |
| 12340c37cb |
| d1fff7bfa7 |
| 647d3f7ec3 |
| 90664d47bf |
| bc56c3ca72 |
| 823958507b |
| 712c4a5862 |
| b5817b8d4a |
| 6269c40580 |
| c1fb4619a4 |
| a7c5c38c26 |
| c14260c1fe |
| 76bd983ba3 |
| 2de1c720ee |
| 37e1c15e6d |
| c16d110de3 |
| f2c3574da7 |
| b4fe4f717a |
| 9ff721ffcb |
| f74cecf0aa |
| b540400110 |
| d29298e0cc |
| cbeced9121 |
| 8dd8ccc527 |
| beba0ca714 |
| bb82f208c0 |
| 890f1a48c2 |
| c70a18919b |
| 732a0075f8 |
| 86ead9b43d |
| db3319b0d3 |
| a588e0b989 |
| b22435dd32 |
| b0347d1ca7 |
| fad8b3dc88 |
| 95eb9dd6e9 |
| 93ee32175d |
| 86fafeebf5 |
| 29d1e7212d |
| 8e14221739 |
| cd80710708 |
| 3e0a7cc796 |
| 98000bd2fc |
| d1d3cd2bf5 |
| b70b0b72cb |
| a831592c3c |
| e00199cf06 |
| dc34db53e4 |
| a925129981 |
| e418a867b3 |
| 040be35162 |
| 316d45e2fa |
| 8ab0e2504b |
| b29b496b88 |
| e144f0d388 |
| ae01f41f30 |
| fb27ac9187 |
| 770bb495a5 |

`.gitignore` (vendored, 1 changed line)

@@ -1 +1,2 @@
tags/temp/
__pycache__/

`README.md` (103 changed lines)

@@ -14,11 +14,12 @@ Since some Stable Diffusion models were trained using this information, for exam

|

||||

You can install it using the inbuilt available extensions list, clone the files manually as described [below](#installation), or use a pre-packaged version from [Releases](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/releases).

|

||||

|

||||

## Common Problems & Known Issues:

|

||||

- If `replaceUnderscores` is active, the script will currently only partially replace edited tags containing multiple words in brackets.

|

||||

For example, editing `atago (azur lane)`, it would be replaced with e.g. `taihou (azur lane), lane)`, since the script currently doesn't see the second part of the bracket as the same tag. So in those cases you should delete the old tag beforehand.

|

||||

- Depending on your browser settings, sometimes an old version of the script can get cached. Try `CTRL+F5` to force-reload the site without cache if e.g. a new feature doesn't appear for you after an update.

|

||||

|

||||

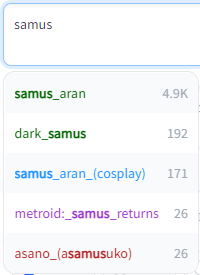

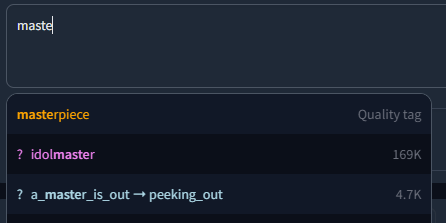

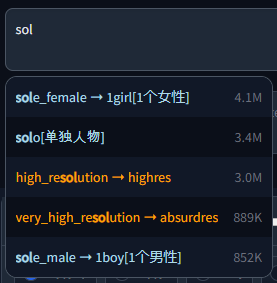

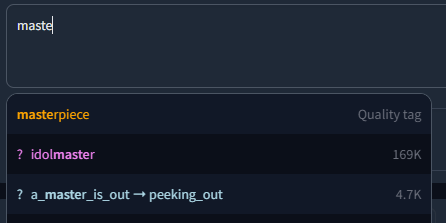

## Screenshots

Demo video (with keyboard navigation):

## Screenshots & Demo videos

<details>
<summary>Click to expand</summary>

Basic usage (with keyboard navigation):

https://user-images.githubusercontent.com/34448969/200128020-10d9a8b2-cea6-4e3f-bcd2-8c40c8c73233.mp4

@@ -30,32 +31,61 @@ Dark and Light mode supported, including tag colors:

</details>

## Installation

|

||||

### As an extension (recommended)

|

||||

Either clone the repo into your extensions folder:

|

||||

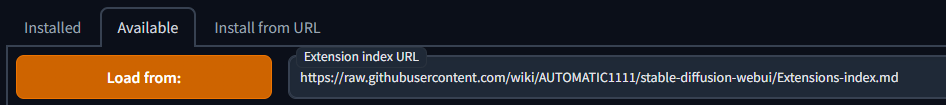

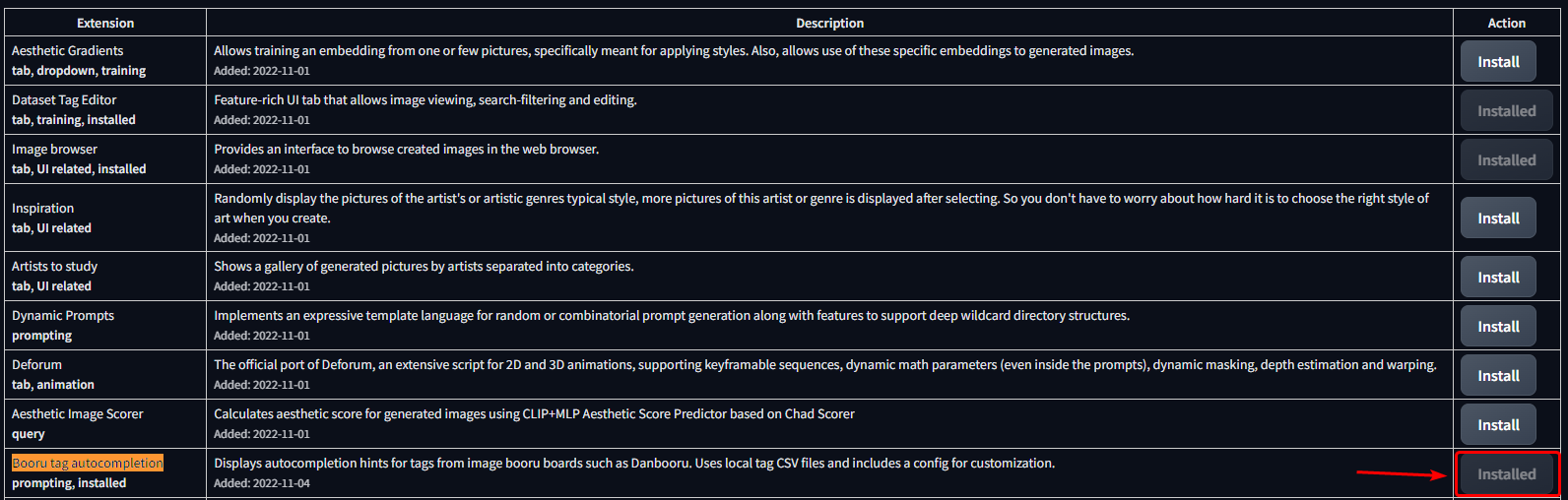

### Using the built-in extension list

|

||||

1. Open the Extensions tab

|

||||

2. Open the Available sub-tab

|

||||

3. Click "Load from:"

|

||||

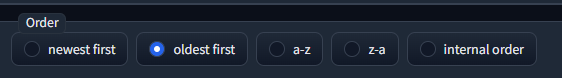

4. Find "Booru tag autocompletion" in the list

|

||||

- The extension was one of the first available, so selecting "oldest first" will show it high up in the list.

|

||||

5. Click "Install" on the right side

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### Manual clone

|

||||

```bash

|

||||

git clone "https://github.com/DominikDoom/a1111-sd-webui-tagcomplete.git" extensions/tag-autocomplete

|

||||

```

|

||||

(The second argument specifies the name of the folder, you can choose whatever you like).

|

||||

|

||||

Or create a folder there manually and place the `javascript`, `scripts` and `tags` folders in it.

|

||||

## Additional completion support

|

||||

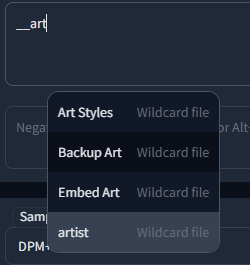

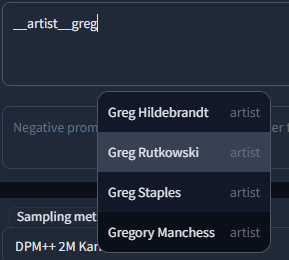

### Wildcards

|

||||

Autocompletion also works with wildcard files used by https://github.com/AUTOMATIC1111/stable-diffusion-webui-wildcards or other similar scripts/extensions.

|

||||

Completion is triggered by typing `__` (double underscore). It will first show a list of your wildcard files, and upon choosing one, the replacement options inside that file.

|

||||

This enables you to either insert categories to be replaced by the script, or directly choose one and use wildcards as a sort of categorized custom tag system.
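To illustrate the two-stage `__` trigger described above, here is a minimal sketch that classifies the word being typed and filters candidates for each stage. It makes two assumptions: a chosen file appears as `__name__` in the prompt, and wildcard files are available as a plain object mapping file names to option lists. `parseWildcardWord` and `wildcardCandidates` are hypothetical names, not the extension's own functions.

```javascript
// Hypothetical sketch: classify the word under the caret for wildcard completion.
// Assumes a file reference reads "__name" while typing and "__name__" once chosen.
function parseWildcardWord(word) {
    if (!word.startsWith("__")) return null; // not a wildcard trigger
    const rest = word.slice(2);
    const end = rest.indexOf("__");
    if (end === -1) {
        // Stage 1: still typing the file name, e.g. "__hai"
        return { stage: "file", query: rest };
    }
    // Stage 2: a file has been chosen, e.g. "__hair__" or "__hair__lo"
    return { stage: "content", file: rest.slice(0, end), query: rest.slice(end + 2) };
}

// Case-insensitive substring filter for whichever stage applies.
// `files` maps a wildcard file name to the list of options inside it.
function wildcardCandidates(word, files) {
    const parsed = parseWildcardWord(word);
    if (!parsed) return [];
    const q = parsed.query.toLowerCase();
    if (parsed.stage === "file") {
        return Object.keys(files).filter(f => f.toLowerCase().includes(q));
    }
    const options = files[parsed.file] || [];
    return options.filter(o => o.toLowerCase().includes(q));
}
```

With `{ hair: ["long hair", "short hair"], eyes: ["blue eyes"] }`, typing `__ha` would list `hair`, and `__hair__lo` would list `long hair`.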

### In the root folder (old)

Copy the `javascript`, `scripts` and `tags` folders into your web UI installation root. It will run automatically the next time the web UI is started.

---

In both configurations, the `tags` folder contains `colors.json` and the tag data the script uses for autocompletion. By default, Danbooru and e621 tags are included.

After scanning for embeddings and wildcards, the script will also create a `temp` directory here. It lists the found files so they can be accessed on the browser side of the script. You can safely delete the `temp` folder, since it will be recreated on the next startup.

Wildcards are searched for in every extension folder, as well as in the `scripts/wildcards` folder to support legacy versions. This means that you can combine wildcards from multiple extensions. Nested folders are also supported if you have grouped your wildcards that way.

### Important:

|

||||

The script needs **all three folders** to work properly.

|

||||

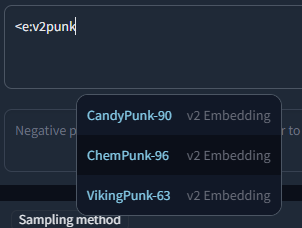

### Embeddings, Lora & Hypernets

|

||||

Completion for these three types is triggered by typing `<`. By default it will show all three mixed together, but further filtering can be done in the following way:

|

||||

- `<e:` will only show embeddings

|

||||

- `<l:` or `<lora:` will only show Lora

|

||||

- `<h:` or `<hypernet:` will only show Hypernetworks
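The prefix rules in the list above can be expressed as a small lookup table. This is a hypothetical sketch; `PREFIX_FILTERS` and `splitNetworkQuery` are illustrative names, and the extension's parsers are not actually wired this way. It only shows that the specific prefixes must be checked before the bare `<`.

```javascript
// Hypothetical lookup table: typed prefix -> result types to show.
// Specific prefixes come first; the bare "<" catch-all must be checked last.
const PREFIX_FILTERS = [
    ["<lora:", ["lora"]],
    ["<l:", ["lora"]],
    ["<hypernet:", ["hypernetwork"]],
    ["<h:", ["hypernetwork"]],
    ["<e:", ["embedding"]],
    ["<", ["embedding", "lora", "hypernetwork"]],
];

// Returns the result types and the remaining search text, or null if no "<" trigger.
function splitNetworkQuery(word) {
    for (const [prefix, types] of PREFIX_FILTERS) {
        if (word.startsWith(prefix)) {
            return { types, query: word.slice(prefix.length) };
        }
    }
    return null;
}
```

For example, `<l:detail` and `<lora:detail` both resolve to the Lora type with the query `detail`, while `<detail` keeps all three types.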

## Wildcard & Embedding support

Autocompletion also works with wildcard files used by [this script](https://github.com/jtkelm2/stable-diffusion-webui-1/blob/master/scripts/wildcards.py) of the same name or other similar scripts/extensions. This enables you to either insert categories to be replaced by the script, or even replace them with the actual wildcard file content in the same step. Wildcards are searched for in every extension folder as well as the `scripts/wildcards` folder to support legacy versions. This means that you can combine wildcards from multiple extensions. Nested folders are also supported if you have grouped your wildcards in that way.

#### Embedding type filtering

Embeddings trained for Stable Diffusion 1.x models are incompatible with 2.x models, and vice versa. To make valid embeddings easier to find, they are labeled "v1 Embedding" or "v2 Embedding" and shown in slightly different colors. You can also restrict the search to v1 or v2 embeddings by typing `<v1`/`<v2` or `<e:v1`/`<e:v2` followed by the actual search term.
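The v1/v2 filter can be sketched as a standalone function modeled on `EmbeddingParser.parse()` in `javascript/ext_embeddings.js`. The sketch assumes embeddings are `[name, version]` pairs such as `["style", "v1"]`, which is what that file's loader produces. Unlike the original, it lowercases the search term before comparing.

```javascript
// Sketch modeled on EmbeddingParser.parse(); embeddings are [name, version] pairs.
function filterEmbeddings(tagword, embeddings) {
    if (tagword === "<" || tagword === "<e:") return embeddings; // nothing typed yet: show all
    let searchTerm = tagword.replace("<e:", "").replace("<", "").toLowerCase();
    let version = null;
    if (searchTerm.startsWith("v1") || searchTerm.startsWith("v2")) {
        version = searchTerm.slice(0, 2);   // "v1" or "v2"
        searchTerm = searchTerm.slice(2);   // the rest is the actual search term
    }
    // Match either the raw name or the name with spaces written as underscores
    const matches = name =>
        name.toLowerCase().includes(searchTerm) ||
        name.toLowerCase().replace(/ /g, "_").includes(searchTerm);
    return embeddings.filter(([name, v]) => matches(name) && (!version || v === version));
}
```

One consequence of this prefix rule: a search term that itself starts with `v1` or `v2` is always read as a version filter.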

It also scans the embeddings folder and shows completion hints for the names of all .pt, .bin and .png files inside it when you start typing `<`. Note that some normal tags also use `<` in kaomoji (like `>_<`), so the results will contain both.

For example:

### Umi AI tags

https://github.com/Klokinator/Umi-AI is a feature-rich wildcard extension similar to Unprompted or Dynamic Wildcards.

In recent releases, it uses YAML-based wildcard tags to enable a complex chaining system. For example, `<[preset][--female][sfw][species]>` will choose the preset category, exclude female-related tags, further narrow it down with the following categories, and then choose one random fill-in matching all these criteria at runtime. Completion is triggered by `<[` and then by each following new unclosed bracket, e.g. `<[xyz][`, until closed by `>`.

Tag Autocomplete can recommend these options in a smart way: as you continue to add category tags, it will only show results that still match what comes before.

It also shows how many fill-in tags are available for that combination in place of the tag post count. This gives a quick overview and makes the large initial set easier to filter.
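Here is a rough sketch of the include/exclude narrowing described above. It uses assumed data: each YAML wildcard entry is modeled as `{ name, tags }`, and a `--` prefix excludes a category. `parseUmiChain` and `matchingEntries` are hypothetical helpers, not part of Umi or Tag Autocomplete. The length of the returned array corresponds to the fill-in count shown in place of the post count.

```javascript
// Hypothetical: "<[preset][--female][sfw]" -> { include: ["preset", "sfw"], exclude: ["female"] }.
// Only closed [..] groups are read; an unfinished "[spe" being typed is ignored.
function parseUmiChain(text) {
    const groups = [...text.matchAll(/\[([^\]]*)\]/g)].map(m => m[1].trim());
    const include = groups.filter(g => g && !g.startsWith("--"));
    const exclude = groups.filter(g => g.startsWith("--")).map(g => g.slice(2));
    return { include, exclude };
}

// Keep entries that have every included category and none of the excluded ones.
// Entries are assumed to look like { name: "...", tags: ["preset", "sfw", ...] }.
function matchingEntries(text, entries) {
    const { include, exclude } = parseUmiChain(text);
    return entries.filter(e =>
        include.every(c => e.tags.includes(c)) &&
        !exclude.some(c => e.tags.includes(c)));
}
```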

Most of the credit here goes to [@ctwrs](https://github.com/ctwrs), who contributed a lot as one of the Umi developers.

## Settings

@@ -78,11 +108,13 @@ The extension has a large amount of configuration & customizability built in:

| useEmbeddings | Used to toggle the embedding completion functionality. |
| alias | Options for aliases. More info in the section below. |
| translation | Options for translations. More info in the section below. |
| extras | Options for additional tag files / aliases / translations. More info in the section below. |

### colors.json

Additionally, tag type colors can be specified using the separate `colors.json` file in the extension's `tags` folder.

You can also add new ones here for custom tag files (same name as the filename, without the .csv). The first value is for dark mode, the second for light mode. Color names and hex codes should both work.

| extras | Options for additional tag files / aliases / translations. More info below. |
| keymap | Customizable hotkeys. |
| colors | Customizable tag colors. More info below. |

### Colors

Tag type colors can be specified by changing the JSON code in the tag autocomplete settings.

The format is standard JSON, with the object names corresponding to the tag filenames (without the .csv) they should be used for.

The first value in the square brackets is for dark mode, the second for light mode. Color names and hex codes should both work.

```json
{
    "danbooru": {
@@ -106,6 +138,7 @@ You can also add new ones here for custom tag files (same name as filename, with
    }
}
```

This can also be used to add new color sets for custom tag files.

The numbers specify the tag type, which depends on the tag source. For an example, see [CSV tag data](#csv-tag-data).
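As a sketch of how the `[dark, light]` pairs could be resolved for a given tag, the function below looks up a color by tag file name and tag type. The `getTagColor` name and the example values are illustrative assumptions, not the shipped defaults. JSON object keys are strings, so the numeric type is converted before the lookup.

```javascript
// Hypothetical helper: resolve a tag color from a config shaped like
// { "<tag file name>": { "<tag type>": [darkModeColor, lightModeColor] } }.
function getTagColor(colors, tagFile, tagType, darkMode) {
    const byType = colors[tagFile];
    if (!byType) return null;               // no color set for this tag file
    const pair = byType[String(tagType)];   // JSON object keys are strings
    if (!pair) return null;                 // no color for this tag type
    return darkMode ? pair[0] : pair[1];    // first = dark mode, second = light mode
}

// Illustrative values only, not the extension's shipped defaults.
const exampleColors = {
    danbooru: {
        "0": ["lightblue", "dodgerblue"],
        "4": ["lightgreen", "#2e8b57"],
    },
};
```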

### Aliases, Translations & Extra tags

@@ -116,34 +149,24 @@ Like on Booru sites, tags can have one or multiple aliases which redirect to the

#### Translations

An additional file can be added in the translation section. It is used to translate both tags and aliases, and it also enables searching by translation.

This file needs to be a CSV in the format `<English tag/alias>,<Translation>`. For backwards compatibility with older files that used a three-column format, you can turn on `oldFormat` to use that format instead.
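Here is a minimal sketch of reading the two-column translation format. It assumes one pair per line, with everything after the first comma treated as the translation. It also builds a reverse map, which is one plausible way to support searching by translation. `loadTranslations` is a hypothetical name, and the three-column `oldFormat` is not handled.

```javascript
// Minimal sketch: parse "<English tag/alias>,<Translation>" lines into two maps.
// Assumes the English column contains no commas.
function loadTranslations(csvText) {
    const toTranslation = new Map(); // English tag/alias -> translation (for display)
    const toEnglish = new Map();     // translation -> English tag/alias (for searching)
    for (const line of csvText.split(/\r?\n/)) {
        if (!line.trim()) continue;             // skip blank lines
        const comma = line.indexOf(",");
        if (comma === -1) continue;             // skip malformed lines
        const english = line.slice(0, comma).trim();
        const translation = line.slice(comma + 1).trim();
        toTranslation.set(english, translation);
        toEnglish.set(translation, english);
    }
    return { toTranslation, toEnglish };
}
```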

|

||||

|

||||

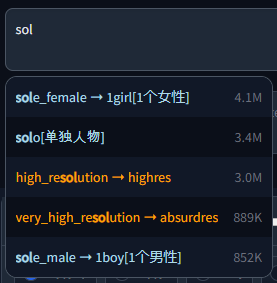

Example with chinese translation:

|

||||

|

||||

|

||||

|

||||

|

||||

**Important**

|

||||

As of a recent update, translations added in the old Extra file way will only work as an alias and not be visible anymore if typing the English tag for that translation.

|

||||

|

||||

#### Extra file

Aliases can be added in multiple ways, which is where the "Extra" file comes into play.

1. As an extra file containing the tag, category, optional count and the new alias. The entries are matched to the English tags in the main file by name and type, so this can be slow for large files.
2. As an extra file with `onlyAliasExtraFile` set to true. In this configuration, the extra file has to include *only* the alias itself. Matching is purely index-based: assigning the aliases to the main tags is very fast, but the lines must line up exactly (including empty lines). If the order or number of lines in the main file changes, the aliases may no longer match. Not recommended.
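The index-based method (2) can be sketched as follows. The tag object shape `{ name, aliases }` and the function name are assumptions for illustration. Line *i* of the extra file is simply attached to line *i* of the main file. That is why the method is fast, and also why it breaks as soon as the two files drift apart.

```javascript
// Hypothetical sketch of onlyAliasExtraFile matching: line i of the extra file
// becomes an alias of line i of the main file. Tag shape { name, aliases } is assumed.
function assignAliasesByIndex(mainTags, aliasLines) {
    if (aliasLines.length !== mainTags.length) {
        // Differing line counts suggest the files no longer line up.
        console.warn(`Alias file has ${aliasLines.length} lines, main file has ${mainTags.length}`);
    }
    const n = Math.min(mainTags.length, aliasLines.length);
    for (let i = 0; i < n; i++) {
        const alias = aliasLines[i].trim();
        if (alias) mainTags[i].aliases.push(alias); // an empty line keeps the slot but adds nothing
    }
    return mainTags;
}
```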

An extra file can be used to add new / custom tags not included in the main set.

The format is identical to the normal tag format shown in [CSV tag data](#csv-tag-data) below, with one exception:

Since custom tags likely have no count, column three (or two if counting from zero) is instead used for the gray meta text displayed next to the tag.

If left empty, it will instead show "Custom tag".

So your CSV values would look like this for each method:

|            | Method 1                 | Method 2                 |
|------------|--------------------------|--------------------------|
| Main file  | `tag,type,count,(alias)` | `tag,type,count,(alias)` |
| Extra file | `tag,type,(count),alias` | `alias`                  |

An example with the included (very basic) extra-quality-tags.csv file:

Count in the extra file is optional, since there isn't always a post count for custom tag sets.

The extra files can also be used just to add new / custom tags not included in the main set, provided `onlyAliasExtraFile` is false.

If an extra tag doesn't match any existing tag, it will be added to the list as a new tag instead. For this, it needs to include the post count and alias columns even if they are empty, e.g. in the form `tag,type,,`.
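Here is a minimal sketch of turning one such extra-file line into a new custom tag. It assumes the columns `tag,type,(meta),alias` and no quoted commas. `parseExtraLine` is a hypothetical helper. It applies the documented fallback of showing "Custom tag" when the third column is empty.

```javascript
// Hypothetical helper: parse one extra-file line "tag,type,(meta),alias" into a
// new custom tag. Assumes no quoted commas in any column.
function parseExtraLine(line) {
    const [tag, type, meta = "", alias = ""] = line.split(",").map(s => s.trim());
    return {
        tag,
        type: Number(type),
        meta: meta || "Custom tag",      // documented fallback when column three is empty
        aliases: alias ? [alias] : [],
    };
}
```

A line like `masterpiece,5,,` therefore becomes a type-5 tag with the meta text "Custom tag" and no aliases.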

##### WARNING

Do not use e621.csv or danbooru.csv as an extra file. Alias comparison has quadratic runtime, because each extra tag is compared against each main tag. For the combination of danbooru+e621, that means about 10,000,000,000 (yes, ten billion) lookups, which usually takes multiple minutes to load.

You can choose in the settings whether the custom tags are added before or after the normal tags.

## CSV tag data

The script expects a CSV file with tags saved in the following way:

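`README_ZH.md` (37 changed lines)

@@ -39,8 +39,8 @@ git clone "https://github.com/DominikDoom/a1111-sd-webui-tagcomplete.git" extens

Or manually create a folder and place the `javascript`, `scripts` and `tags` folders in it.

### In the root directory (old method)

Simply copy the `javascript`, `scripts` and `tags` folders into your Web UI installation root directory. It will run automatically the next time the Web UI is started.

### In the root directory (outdated method)

This installation method is for old versions of the webui from before the extension system was added. It does not work with current versions.

---

In both configurations, the `tags` folder contains `colors.json` and the tag data the script uses for autocompletion.

@@ -81,9 +81,10 @@ git clone "https://github.com/DominikDoom/a1111-sd-webui-tagcomplete.git" extens

| translation | Options for translating tags. More info in the section below. |
| extras | Options for additional tag files / translations. More info in the section below. |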

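### colors.json (tag colors)

Additionally, tag type colors can be specified using the separate `colors.json` file in the extension's `tags` folder.

You can also add new ones here for custom tag files (same name as the filename, without the .csv). The first value is for dark mode, the second for light mode. Both color names and hex codes are supported.

### Tag colors

Tag type colors can be specified by changing the JSON code in the tag autocomplete settings. The format is standard JSON, with the object names corresponding to the tag filenames (without the .csv) they should be used for.

The first value in the square brackets is for dark mode, the second for light mode. Both color names and hex codes should work.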

```json
{
    "danbooru": {
@@ -107,7 +108,7 @@ git clone "https://github.com/DominikDoom/a1111-sd-webui-tagcomplete.git" extens
    }
}
```

The numbers specify the tag type, which depends on the tag source. For an example, see [CSV tag data](#csv-tag-data).

This can also be used to add new color sets for custom tag files. The numbers specify the tag type, which depends on the tag source. For an example, see [CSV tag data](#csv-tag-data).

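### Aliases, Translations & New tags

#### Aliases

@@ -124,24 +125,20 @@ git clone "https://github.com/DominikDoom/a1111-sd-webui-tagcomplete.git" extens

**Important**

As of a recent update, translations added via the old Extra file method only work as aliases. They are no longer visible when you type the English tag for that translation.

#### Extra file

An extra file can be used to add new / custom tags not included in the main set.

Its format is identical to the normal tag format shown in [CSV tag data](#csv-tag-data) below, with one exception:

Since custom tags have no post count, column three (or two if counting from zero) is used for the gray meta text displayed next to the tag.

If left empty, it will show "Custom tag".

Aliases can be added in multiple ways, which is where the extra file comes into play.

1. As an extra file containing only the translated tag lines (so still including the English tag name and tag type). These are matched to the English tags in the main file by name and type, so this can be slow for large translation files.
2. As an extra file with `onlyAliasExtraFile` set to true. In this configuration, the extra file must contain *only* the translation itself. Matching is therefore purely index-based: assigning the translations to the main tags is very fast, but the lines must line up exactly (including empty lines). If the order or number of lines in the main file changes, the translations may no longer match.

An example with the default (very basic) extra-quality-tags.csv:

So your CSV values would look like this for each method:

|            | Method 1                 | Method 2                 |
|------------|--------------------------|--------------------------|
| Main file  | `tag,type,count,(alias)` | `tag,type,count,(alias)` |
| Extra file | `tag,type,(count),alias` | `alias`                  |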

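Count in the extra file is optional, since custom tag sets don't always have a post count.

If an extra tag doesn't match any existing tag, it will be added to the list as a new tag.

You can choose in the settings whether custom tags are added before or after the normal tags.

### CSV tag data

The tag files for this script use the following format, and you can create your own tag files in it:

```csv
1girl,0,4114588,"1girls,sole_female"
solo,0,3426446,"female_solo,solo_female"
@@ -151,7 +148,7 @@ commentary_request,5,2610959,
```

Note that the first line is not expected to contain column names. Count and aliases are both technically optional, although count is always included in the default data. Multiple aliases are comma-separated and must be wrapped in quotes so they don't break CSV parsing.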
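To show why quoting matters, here is a minimal sketch that splits one tag line while keeping commas inside a quoted alias column together. It is not the extension's own `parseCSV()`, whose body is not shown in this diff. `splitCsvLine` and `parseTagLine` are hypothetical helpers, and escaped quotes (`""`) inside a field are not handled.

```javascript
// Split one CSV line, keeping commas inside double-quoted fields.
function splitCsvLine(line) {
    const fields = [];
    let current = "";
    let inQuotes = false;
    for (const ch of line) {
        if (ch === '"') {
            inQuotes = !inQuotes;       // toggle quoted section; the quote itself is dropped
        } else if (ch === "," && !inQuotes) {
            fields.push(current);       // field boundary outside quotes
            current = "";
        } else {
            current += ch;
        }
    }
    fields.push(current);
    return fields;
}

// name,type,count,"alias1,alias2": count and aliases are optional.
function parseTagLine(line) {
    const [name, type, count = "", aliases = ""] = splitCsvLine(line);
    return {
        name,
        type: Number(type),
        count: count === "" ? null : Number(count),
        aliases: aliases ? aliases.split(",") : [],
    };
}
```

For the first sample line above, this yields the name `1girl`, type `0`, count `4114588` and the two aliases `1girls` and `sole_female`.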

The numbering system follows Danbooru's [tag API docs](https://danbooru.donmai.us/wiki_pages/api%3Atags):

| Value | Description |
|-------|-------------|
| 0     | General     |

`javascript/__globals.js` (Normal file, 51 changed lines)

@@ -0,0 +1,51 @@
// Core components
var CFG = null;
var tagBasePath = "";

// Tag completion data loaded from files
var allTags = [];
var translations = new Map();
var extras = [];
// Same for tag-likes
var wildcardFiles = [];
var wildcardExtFiles = [];
var yamlWildcards = [];
var embeddings = [];
var hypernetworks = [];
var loras = [];

// Selected model info for black/whitelisting
var currentModelHash = "";
var currentModelName = "";

// Current results
var results = [];
var resultCount = 0;

// Relevant for parsing
var previousTags = [];
var tagword = "";
var originalTagword = "";
let hideBlocked = false;

// Tag selection for keyboard navigation
var selectedTag = null;
var oldSelectedTag = null;

// UMI
var umiPreviousTags = [];

/// Extendability system:
/// Provides "queues" for other files of the script (or really any js)
/// to add functions to be called at certain points in the script.
/// Similar to a callback system, but primitive.

// Queues
const QUEUE_AFTER_INSERT = [];
const QUEUE_AFTER_SETUP = [];
const QUEUE_FILE_LOAD = [];
const QUEUE_AFTER_CONFIG_CHANGE = [];
const QUEUE_SANITIZE = [];

// List of parsers to try
const PARSERS = [];

`javascript/_baseParser.js` (Normal file, 21 changed lines)

@@ -0,0 +1,21 @@
class FunctionNotOverriddenError extends Error {
    constructor(message = "", ...args) {
        super(message, ...args);
        this.message = message + " is an abstract base function and must be overwritten.";
    }
}

class BaseTagParser {
    triggerCondition = null;

    constructor (triggerCondition) {
        if (new.target === BaseTagParser) {
            throw new TypeError("Cannot construct abstract BaseCompletionParser directly");
        }
        this.triggerCondition = triggerCondition;
    }

    parse() {
        throw new FunctionNotOverriddenError("parse()");
    }
}
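As a usage sketch for `BaseTagParser`, the block below defines a hypothetical parser and registers it in `PARSERS`. To run on its own, it includes a trimmed copy of the base class and stand-ins for the `tagword` and `PARSERS` globals from `javascript/__globals.js`. `SnippetParser` and its `!` trigger are invented for illustration. `processParsers()` collects `parser.parse` detached from its instance and calls it with a `null` context, so `parse()` here does not rely on `this`.

```javascript
// Trimmed copy of BaseTagParser so this sketch runs standalone.
class BaseTagParser {
    triggerCondition = null;
    constructor(triggerCondition) {
        if (new.target === BaseTagParser) {
            throw new TypeError("Cannot construct abstract BaseTagParser directly");
        }
        this.triggerCondition = triggerCondition;
    }
    parse() {
        throw new Error("parse() is an abstract base function and must be overwritten.");
    }
}

// Stand-ins for globals declared in javascript/__globals.js.
let tagword = "";
const PARSERS = [];

// Hypothetical parser: completes words starting with "!" from a fixed list.
const SNIPPETS = ["!highres", "!portrait", "!landscape"];
class SnippetParser extends BaseTagParser {
    parse() {
        // Uses only globals, never `this`, because it may be called detached.
        const query = tagword.slice(1).toLowerCase();
        return SNIPPETS.filter(s => s.slice(1).includes(query));
    }
}

// The trigger condition decides whether this parser runs for the current word.
PARSERS.push(new SnippetParser(() => tagword.startsWith("!")));
```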

`javascript/_caretPosition.js` (Normal file, 145 changed lines)

@@ -0,0 +1,145 @@
// From https://github.com/component/textarea-caret-position

// We'll copy the properties below into the mirror div.
// Note that some browsers, such as Firefox, do not concatenate properties
// into their shorthand (e.g. padding-top, padding-bottom etc. -> padding),
// so we have to list every single property explicitly.
var properties = [
  'direction', // RTL support
  'boxSizing',
  'width', // on Chrome and IE, exclude the scrollbar, so the mirror div wraps exactly as the textarea does
  'height',
  'overflowX',
  'overflowY', // copy the scrollbar for IE

  'borderTopWidth',
  'borderRightWidth',
  'borderBottomWidth',
  'borderLeftWidth',
  'borderStyle',

  'paddingTop',
  'paddingRight',
  'paddingBottom',
  'paddingLeft',

  // https://developer.mozilla.org/en-US/docs/Web/CSS/font
  'fontStyle',
  'fontVariant',
  'fontWeight',
  'fontStretch',
  'fontSize',
  'fontSizeAdjust',
  'lineHeight',
  'fontFamily',

  'textAlign',
  'textTransform',
  'textIndent',
  'textDecoration', // might not make a difference, but better be safe

  'letterSpacing',
  'wordSpacing',

  'tabSize',
  'MozTabSize'

];

var isBrowser = (typeof window !== 'undefined');
var isFirefox = (isBrowser && window.mozInnerScreenX != null);

function getCaretCoordinates(element, position, options) {
  if (!isBrowser) {
    throw new Error('textarea-caret-position#getCaretCoordinates should only be called in a browser');
  }

  var debug = options && options.debug || false;
  if (debug) {
    var el = document.querySelector('#input-textarea-caret-position-mirror-div');
    if (el) el.parentNode.removeChild(el);
  }

  // The mirror div will replicate the textarea's style
  var div = document.createElement('div');
  div.id = 'input-textarea-caret-position-mirror-div';
  document.body.appendChild(div);

  var style = div.style;
  var computed = window.getComputedStyle ? window.getComputedStyle(element) : element.currentStyle; // currentStyle for IE < 9
  var isInput = element.nodeName === 'INPUT';

  // Default textarea styles
  style.whiteSpace = 'pre-wrap';
  if (!isInput)
    style.wordWrap = 'break-word'; // only for textarea-s

  // Position off-screen
  style.position = 'absolute'; // required to return coordinates properly
  if (!debug)
    style.visibility = 'hidden'; // not 'display: none' because we want rendering

  // Transfer the element's properties to the div
  properties.forEach(function (prop) {
    if (isInput && prop === 'lineHeight') {
      // Special case for <input>s because text is rendered centered and line height may be != height
      if (computed.boxSizing === "border-box") {
        var height = parseInt(computed.height);
        var outerHeight =
          parseInt(computed.paddingTop) +
          parseInt(computed.paddingBottom) +
          parseInt(computed.borderTopWidth) +
          parseInt(computed.borderBottomWidth);
        var targetHeight = outerHeight + parseInt(computed.lineHeight);
        if (height > targetHeight) {
          style.lineHeight = height - outerHeight + "px";
        } else if (height === targetHeight) {
          style.lineHeight = computed.lineHeight;
        } else {
          style.lineHeight = 0;
        }
      } else {
        style.lineHeight = computed.height;
      }
    } else {
      style[prop] = computed[prop];
    }
  });

  if (isFirefox) {
    // Firefox lies about the overflow property for textareas: https://bugzilla.mozilla.org/show_bug.cgi?id=984275
    if (element.scrollHeight > parseInt(computed.height))
      style.overflowY = 'scroll';
  } else {
    style.overflow = 'hidden'; // for Chrome to not render a scrollbar; IE keeps overflowY = 'scroll'
  }

  div.textContent = element.value.substring(0, position);
  // The second special handling for input type="text" vs textarea:
  // spaces need to be replaced with non-breaking spaces - http://stackoverflow.com/a/13402035/1269037
  if (isInput)
    div.textContent = div.textContent.replace(/\s/g, '\u00a0');

  var span = document.createElement('span');
  // Wrapping must be replicated *exactly*, including when a long word gets
  // onto the next line, with whitespace at the end of the line before (#7).
  // The *only* reliable way to do that is to copy the *entire* rest of the
  // textarea's content into the <span> created at the caret position.
  // For inputs, just '.' would be enough, but no need to bother.
  span.textContent = element.value.substring(position) || '.'; // || because a completely empty faux span doesn't render at all
  div.appendChild(span);

  var coordinates = {
    top: span.offsetTop + parseInt(computed['borderTopWidth']),
    left: span.offsetLeft + parseInt(computed['borderLeftWidth']),
    height: parseInt(computed['lineHeight'])
  };

  if (debug) {
    span.style.backgroundColor = '#aaa';
  } else {
    document.body.removeChild(div);
  }

  return coordinates;
}

@@ -3,10 +3,13 @@
 // Type enum
 const ResultType = Object.freeze({
     "tag": 1,
-    "embedding": 2,
-    "wildcardTag": 3,
-    "wildcardFile": 4,
-    "yamlWildcard": 5
+    "extra": 2,
+    "embedding": 3,
+    "wildcardTag": 4,
+    "wildcardFile": 5,
+    "yamlWildcard": 6,
+    "hypernetwork": 7,
+    "lora": 8
 });
 
 // Class to hold result data and annotations to make it clearer to use

@@ -38,7 +38,10 @@ function parseCSV(str) {
 }
 
 // Load file
-async function readFile(filePath, json = false) {
+async function readFile(filePath, json = false, cache = false) {
+    if (!cache)
+        filePath += `?${new Date().getTime()}`;
+
     let response = await fetch(`file=${filePath}`);
 
     if (response.status != 200) {

@@ -93,4 +96,35 @@ function escapeHTML(unsafeText) {
    let div = document.createElement('div');
    div.textContent = unsafeText;
    return div.innerHTML;
}

// Queue calling function to process global queues
async function processQueue(queue, context, ...args) {
    for (let i = 0; i < queue.length; i++) {
        await queue[i].call(context, ...args);
    }
}
// The same but with return values
async function processQueueReturn(queue, context, ...args)
{
    let qeueueReturns = [];
    for (let i = 0; i < queue.length; i++) {
        let returnValue = await queue[i].call(context, ...args);
        if (returnValue)
            qeueueReturns.push(returnValue);
    }
    return qeueueReturns;
}
// Specific to tag completion parsers
async function processParsers(textArea, prompt) {
    // Get all parsers that have a successful trigger condition
    let matchingParsers = PARSERS.filter(parser => parser.triggerCondition());
    // Guard condition
    if (matchingParsers.length === 0) {
        return null;
    }

    let parseFunctions = matchingParsers.map(parser => parser.parse);
    // Process them and return the results
    return await processQueueReturn(parseFunctions, null, textArea, prompt);
}
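Here is a short usage sketch of the queue helpers above. It repeats `processQueue` and `processQueueReturn` so it runs standalone, and registers two hypothetical sanitizers and one after-insert hook. The result type numbers mirror the updated `ResultType` enum in this diff. The fixed `:1` multiplier stands in for `CFG.extraNetworksDefaultMultiplier`. `processQueueReturn` only keeps truthy values, so a handler that returns an empty string is treated the same as one that returns `null`.

```javascript
// Standalone copies of the queue helpers from the diff above.
async function processQueue(queue, context, ...args) {
    for (let i = 0; i < queue.length; i++) {
        await queue[i].call(context, ...args); // run hooks in order, awaiting each one
    }
}

async function processQueueReturn(queue, context, ...args) {
    const queueReturns = [];
    for (let i = 0; i < queue.length; i++) {
        const returnValue = await queue[i].call(context, ...args);
        if (returnValue) queueReturns.push(returnValue); // falsy results are dropped
    }
    return queueReturns;
}

// Values mirror the updated ResultType enum in this diff.
const ResultType = Object.freeze({ embedding: 3, hypernetwork: 7 });
const QUEUE_SANITIZE = [];
const QUEUE_AFTER_INSERT = [];
const insertLog = [];

// Hypothetical sanitizers: each returns a string only for its own result type.
QUEUE_SANITIZE.push((tagType, text) =>
    tagType === ResultType.hypernetwork ? `<hypernet:${text}:1>` : null);
QUEUE_SANITIZE.push((tagType, text) =>
    tagType === ResultType.embedding ? text.trim() : null);

// Hypothetical after-insert hook.
QUEUE_AFTER_INSERT.push(async (insertedText) => {
    insertLog.push(`inserted ${insertedText}`);
});
```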

`javascript/ext_embeddings.js` (Normal file, 61 changed lines)

@@ -0,0 +1,61 @@
const EMB_REGEX = /<(?!l:|h:)[^,> ]*>?/g;
const EMB_TRIGGER = () => CFG.useEmbeddings && tagword.match(EMB_REGEX);

class EmbeddingParser extends BaseTagParser {
    parse() {
        // Show embeddings
        let tempResults = [];
        if (tagword !== "<" && tagword !== "<e:") {
            let searchTerm = tagword.replace("<e:", "").replace("<", "");
            let versionString;
            if (searchTerm.startsWith("v1") || searchTerm.startsWith("v2")) {
                versionString = searchTerm.slice(0, 2);
                searchTerm = searchTerm.slice(2);
            }

            let filterCondition = x => x[0].toLowerCase().includes(searchTerm) || x[0].toLowerCase().replaceAll(" ", "_").includes(searchTerm);

            if (versionString)
                tempResults = embeddings.filter(x => filterCondition(x) && x[1] && x[1] === versionString); // Filter by tagword
            else
                tempResults = embeddings.filter(x => filterCondition(x)); // Filter by tagword
        } else {
            tempResults = embeddings;
        }

        // Add final results
        let finalResults = [];
        tempResults.forEach(t => {
            let result = new AutocompleteResult(t[0].trim(), ResultType.embedding)
            result.meta = t[1] + " Embedding";
            finalResults.push(result);
        });

        return finalResults;
    }
}

async function load() {
    if (embeddings.length === 0) {
        try {
            embeddings = (await readFile(`${tagBasePath}/temp/emb.txt`)).split("\n")
                .filter(x => x.trim().length > 0) // Remove empty lines
                .map(x => x.trim().split(",")); // Split into name, version type pairs
        } catch (e) {
            console.error("Error loading embeddings.txt: " + e);
        }
    }
}

function sanitize(tagType, text) {
    if (tagType === ResultType.embedding) {
        return text.replace(/^.*?: /g, "");
    }
    return null;
}

PARSERS.push(new EmbeddingParser(EMB_TRIGGER));

// Add our utility functions to their respective queues
QUEUE_FILE_LOAD.push(load);
QUEUE_SANITIZE.push(sanitize);

51

javascript/ext_hypernets.js

Normal file


@@ -0,0 +1,51 @@

const HYP_REGEX = /<(?!e:|l:)[^,> ]*>?/g;
const HYP_TRIGGER = () => CFG.useHypernetworks && tagword.match(HYP_REGEX);

class HypernetParser extends BaseTagParser {
    parse() {
        // Show hypernetworks
        let tempResults = [];
        if (tagword !== "<" && tagword !== "<h:" && tagword !== "<hypernet:") {
            let searchTerm = tagword.replace("<hypernet:", "").replace("<h:", "").replace("<", "");
            let filterCondition = x => x.toLowerCase().includes(searchTerm) || x.toLowerCase().replaceAll(" ", "_").includes(searchTerm);
            tempResults = hypernetworks.filter(x => filterCondition(x)); // Filter by tagword
        } else {
            tempResults = hypernetworks;
        }

        // Add final results
        let finalResults = [];
        tempResults.forEach(t => {
            let result = new AutocompleteResult(t.trim(), ResultType.hypernetwork)
            result.meta = "Hypernetwork";
            finalResults.push(result);
        });

        return finalResults;
    }
}

async function load() {
    if (hypernetworks.length === 0) {
        try {
            hypernetworks = (await readFile(`${tagBasePath}/temp/hyp.txt`)).split("\n")
                .filter(x => x.trim().length > 0) //Remove empty lines
                .map(x => x.trim()); // Remove carriage returns and padding if it exists
        } catch (e) {
            console.error("Error loading hypernetworks.txt: " + e);
        }
    }
}

function sanitize(tagType, text) {
    if (tagType === ResultType.hypernetwork) {
        return `<hypernet:${text}:${CFG.extraNetworksDefaultMultiplier}>`;
    }
    return null;
}

PARSERS.push(new HypernetParser(HYP_TRIGGER));

// Add our utility functions to their respective queues
QUEUE_FILE_LOAD.push(load);
QUEUE_SANITIZE.push(sanitize);
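The hypernetwork trigger uses a negative lookahead, so input starting with `<e:` or `<l:` does not match it. A small sketch of that behaviour, together with the prefix stripping and insertion format used in `ext_hypernets.js`; `hypernetSearchTerm` and `hypernetPromptText` are hypothetical helpers, and the `multiplier` argument stands in for `CFG.extraNetworksDefaultMultiplier`.

```javascript
// Trigger regex copied from ext_hypernets.js
const HYP_REGEX = /<(?!e:|l:)[^,> ]*>?/g;

// Hypothetical helper: strip the trigger prefixes in the same order as HypernetParser.parse()
function hypernetSearchTerm(tagword) {
    return tagword.replace("<hypernet:", "").replace("<h:", "").replace("<", "");
}

// Hypothetical helper mirroring sanitize(): build the prompt syntax for a chosen hypernetwork.
// `multiplier` stands in for CFG.extraNetworksDefaultMultiplier.
function hypernetPromptText(name, multiplier) {
    return `<hypernet:${name}:${multiplier}>`;
}

console.log("<h:anime".match(HYP_REGEX));         // [ '<h:anime' ]
console.log("<e:style".match(HYP_REGEX));         // null
console.log(hypernetSearchTerm("<hypernet:ani")); // ani
console.log(hypernetPromptText("anime_v2", 1));   // <hypernet:anime_v2:1>
```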

51

javascript/ext_loras.js

Normal file


@@ -0,0 +1,51 @@

const LORA_REGEX = /<(?!e:|h:)[^,> ]*>?/g;
const LORA_TRIGGER = () => CFG.useLoras && tagword.match(LORA_REGEX);

class LoraParser extends BaseTagParser {
    parse() {
        // Show lora
        let tempResults = [];
        if (tagword !== "<" && tagword !== "<l:" && tagword !== "<lora:") {
            let searchTerm = tagword.replace("<lora:", "").replace("<l:", "").replace("<", "");
            let filterCondition = x => x.toLowerCase().includes(searchTerm) || x.toLowerCase().replaceAll(" ", "_").includes(searchTerm);
            tempResults = loras.filter(x => filterCondition(x)); // Filter by tagword
        } else {
            tempResults = loras;
        }

        // Add final results
        let finalResults = [];
        tempResults.forEach(t => {
            let result = new AutocompleteResult(t.trim(), ResultType.lora)
            result.meta = "Lora";
            finalResults.push(result);
        });

        return finalResults;
    }
}

async function load() {
    if (loras.length === 0) {
        try {
            loras = (await readFile(`${tagBasePath}/temp/lora.txt`)).split("\n")
                .filter(x => x.trim().length > 0) // Remove empty lines
                .map(x => x.trim()); // Remove carriage returns and padding if it exists
        } catch (e) {
            console.error("Error loading lora.txt: " + e);
        }
    }
}

function sanitize(tagType, text) {
    if (tagType === ResultType.lora) {
        return `<lora:${text}:${CFG.extraNetworksDefaultMultiplier}>`;
    }
    return null;
}

PARSERS.push(new LoraParser(LORA_TRIGGER));

// Add our utility functions to their respective queues
QUEUE_FILE_LOAD.push(load);
QUEUE_SANITIZE.push(sanitize);
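`LoraParser` and `HypernetParser` differ only in their trigger prefixes, source list and result type. As an illustration only (the extension keeps two separate parsers), the shared filtering step could be factored into one hypothetical helper, `filterExtraNetworks`, which applies the prefixes in the same order as the parsers do.

```javascript
// Hypothetical generic helper: the LoRA and hypernetwork parsers differ only in
// their trigger prefixes, source list and ResultType, so the filtering step can be shared.
function filterExtraNetworks(names, tagword, prefixes) {
    // A bare trigger such as "<" or "<l:" shows the whole list
    if (tagword === "<" || prefixes.includes(tagword)) return names.slice();
    // Strip the long prefix first, then the short one, then a lone "<"
    let searchTerm = tagword;
    for (const p of prefixes) searchTerm = searchTerm.replace(p, "");
    searchTerm = searchTerm.replace("<", "");
    // Match the raw name or the name with spaces replaced by underscores
    return names.filter(x =>
        x.toLowerCase().includes(searchTerm) ||
        x.toLowerCase().replaceAll(" ", "_").includes(searchTerm));
}

const loras = ["Detail Tweaker", "add_saturation", "styleA"];
const loraPrefixes = ["<lora:", "<l:"];
console.log(filterExtraNetworks(loras, "<l:detail_t", loraPrefixes)); // [ 'Detail Tweaker' ]
```

Passing `["<hypernet:", "<h:"]` as the prefixes would reproduce the hypernetwork variant.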

240

javascript/ext_umi.js

Normal file


@@ -0,0 +1,240 @@

const UMI_PROMPT_REGEX = /<[^\s]*?\[[^,<>]*[\]|]?>?/gi;
const UMI_TAG_REGEX = /(?:\[|\||--)([^<>\[\]\-|]+)/gi;

const UMI_TRIGGER = () => CFG.useWildcards && [...tagword.matchAll(UMI_PROMPT_REGEX)].length > 0;

class UmiParser extends BaseTagParser {
    parse(textArea, prompt) {
        // We are in a UMI yaml tag definition, parse further
        let umiSubPrompts = [...prompt.matchAll(UMI_PROMPT_REGEX)];

        let umiTags = [];
        let umiTagsWithOperators = []

        const insertAt = (str,char,pos) => str.slice(0,pos) + char + str.slice(pos);

        umiSubPrompts.forEach(umiSubPrompt => {
            umiTags = umiTags.concat([...umiSubPrompt[0].matchAll(UMI_TAG_REGEX)].map(x => x[1].toLowerCase()));

            const start = umiSubPrompt.index;
            const end = umiSubPrompt.index + umiSubPrompt[0].length;
            if (textArea.selectionStart >= start && textArea.selectionStart <= end) {
                umiTagsWithOperators = insertAt(umiSubPrompt[0], '###', textArea.selectionStart - start);
            }
        });

        // Safety check since UMI parsing sometimes seems to trigger outside of an UMI subprompt and thus fails
        if (umiTagsWithOperators.length === 0) {
            return null;
        }

        const promptSplitToTags = umiTagsWithOperators.replace(']###[', '][').split("][");

        const clean = (str) => str
            .replaceAll('>', '')
            .replaceAll('<', '')
            .replaceAll('[', '')
            .replaceAll(']', '')
            .trim();

        const matches = promptSplitToTags.reduce((acc, curr) => {
            let isOptional = curr.includes("|");
            let isNegative = curr.startsWith("--");
            let out;
            if (isOptional) {
                out = {
                    hasCursor: curr.includes("###"),
                    tags: clean(curr).split('|').map(x => ({
                        hasCursor: x.includes("###"),
                        isNegative: x.startsWith("--"),
                        tag: clean(x).replaceAll("###", '').replaceAll("--", '')
                    }))
                };
                acc.optional.push(out);
                acc.all.push(...out.tags.map(x => x.tag));
            } else if (isNegative) {
                out = {
                    hasCursor: curr.includes("###"),
                    tags: clean(curr).replaceAll("###", '').split('|'),
                };
                out.tags = out.tags.map(x => x.startsWith("--") ? x.substring(2) : x);
                acc.negative.push(out);
                acc.all.push(...out.tags);
            } else {
                out = {
                    hasCursor: curr.includes("###"),
                    tags: clean(curr).replaceAll("###", '').split('|'),
                };
                acc.positive.push(out);
                acc.all.push(...out.tags);
            }
            return acc;
        }, { positive: [], negative: [], optional: [], all: [] });

        //console.log({ matches })

        const filteredWildcards = (tagword) => {
            const wildcards = yamlWildcards.filter(x => {
                let tags = x[1];
                const matchesNeg =
                    matches.negative.length === 0
                    || matches.negative.every(x =>
                        x.hasCursor
                        || x.tags.every(t => !tags[t])
                    );
                if (!matchesNeg) return false;
                const matchesPos =
                    matches.positive.length === 0
                    || matches.positive.every(x =>
                        x.hasCursor
                        || x.tags.every(t => tags[t])
                    );
                if (!matchesPos) return false;
                const matchesOpt =
                    matches.optional.length === 0
                    || matches.optional.some(x =>
                        x.tags.some(t =>
                            t.hasCursor
                            || t.isNegative
                            ? !tags[t.tag]
                            : tags[t.tag]
                        ));
                if (!matchesOpt) return false;
                return true;
            }).reduce((acc, val) => {
                Object.keys(val[1]).forEach(tag => acc[tag] = acc[tag] + 1 || 1);
                return acc;
            }, {});

            return Object.entries(wildcards)
                .sort((a, b) => b[1] - a[1])
                .filter(x =>
                    x[0] === tagword
                    || !matches.all.includes(x[0])
                );
        }

        if (umiTags.length > 0) {
            // Get difference for subprompt
            let tagCountChange = umiTags.length - umiPreviousTags.length;
            let diff = difference(umiTags, umiPreviousTags);
            umiPreviousTags = umiTags;

            // Show all condition
            let showAll = tagword.endsWith("[") || tagword.endsWith("[--") || tagword.endsWith("|");

            // Exit early if the user closed the bracket manually
            if ((!diff || diff.length === 0 || (diff.length === 1 && tagCountChange < 0)) && !showAll) {
                if (!hideBlocked) hideResults(textArea);
                return;
            }

            let umiTagword = diff[0] || '';
            let tempResults = [];
            if (umiTagword && umiTagword.length > 0) {
                umiTagword = umiTagword.toLowerCase().replace(/[\n\r]/g, "");
                originalTagword = tagword;
                tagword = umiTagword;
                let filteredWildcardsSorted = filteredWildcards(umiTagword);
                let searchRegex = new RegExp(`(^|[^a-zA-Z])${escapeRegExp(umiTagword)}`, 'i')
                let baseFilter = x => x[0].toLowerCase().search(searchRegex) > -1;
                let spaceIncludeFilter = x => x[0].toLowerCase().replaceAll(" ", "_").search(searchRegex) > -1;
                tempResults = filteredWildcardsSorted.filter(x => baseFilter(x) || spaceIncludeFilter(x)) // Filter by tagword

                // Add final results
                let finalResults = [];
                tempResults.forEach(t => {
                    let result = new AutocompleteResult(t[0].trim(), ResultType.yamlWildcard)
                    result.count = t[1];
                    finalResults.push(result);
                });

                return finalResults;
            } else if (showAll) {
                let filteredWildcardsSorted = filteredWildcards("");

                // Add final results
                let finalResults = [];
                filteredWildcardsSorted.forEach(t => {
                    let result = new AutocompleteResult(t[0].trim(), ResultType.yamlWildcard)
                    result.count = t[1];
                    finalResults.push(result);
                });

                originalTagword = tagword;
                tagword = "";
                return finalResults;
            }
        } else {
            let filteredWildcardsSorted = filteredWildcards("");

            // Add final results
            let finalResults = [];
            filteredWildcardsSorted.forEach(t => {
                let result = new AutocompleteResult(t[0].trim(), ResultType.yamlWildcard)
                result.count = t[1];
                finalResults.push(result);
            });

            originalTagword = tagword;
            tagword = "";
            return finalResults;
        }
    }
}

function updateUmiTags( tagType, sanitizedText, newPrompt, textArea) {
    // If it was a yaml wildcard, also update the umiPreviousTags
    if (tagType === ResultType.yamlWildcard && originalTagword.length > 0) {
        let umiSubPrompts = [...newPrompt.matchAll(UMI_PROMPT_REGEX)];

        let umiTags = [];
        umiSubPrompts.forEach(umiSubPrompt => {
            umiTags = umiTags.concat([...umiSubPrompt[0].matchAll(UMI_TAG_REGEX)].map(x => x[1].toLowerCase()));
        });

        umiPreviousTags = umiTags;

        hideResults(textArea);

        return true;
    }
    return false;
}

async function load() {
    if (yamlWildcards.length === 0) {
        try {
            let yamlTags = (await readFile(`${tagBasePath}/temp/wcet.txt`)).split("\n");
            // Split into tag, count pairs
            yamlWildcards = yamlTags.map(x => x
                .trim()
                .split(","))
                .map(([i, ...rest]) => [
                    i,
                    rest.reduce((a, b) => {
                        a[b.toLowerCase()] = true;
                        return a;
                    }, {}),
                ]);
        } catch (e) {
            console.error("Error loading yaml wildcards: " + e);
        }
    }
}

function sanitize(tagType, text) {
    // Replace underscores only if the yaml tag is not using them
    if (tagType === ResultType.yamlWildcard && !yamlWildcards.includes(text)) {
        return text.replaceAll("_", " ");
    }
    return null;
}

// Add UMI parser
PARSERS.push(new UmiParser(UMI_TRIGGER));

// Add our utility functions to their respective queues
QUEUE_FILE_LOAD.push(load);
QUEUE_SANITIZE.push(sanitize);
QUEUE_AFTER_INSERT.push(updateUmiTags);
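`filteredWildcards` in `UmiParser` keeps the YAML wildcard entries whose tag sets satisfy the typed constraints, then ranks every tag in those entries by how many entries contain it. The sketch below is a simplified, hypothetical `countCoTags` covering only the positive (all tags required) and negative (all tags excluded) cases; it omits the cursor handling, the optional `|` groups and the `tagword` exception of the original, and assumes entries have the `[name, { tag: true }]` shape built by `load()`.

```javascript
// Simplified, hypothetical version of filteredWildcards() from ext_umi.js.
// Entries have the shape produced by load(): [name, { tag: true, ... }].
// Cursor handling and optional "|" groups are left out for brevity.
function countCoTags(entries, positive, negative) {
    const counts = {};
    for (const [, tags] of entries) {
        // Every positive tag must be present and every negative tag absent
        if (!positive.every(t => tags[t])) continue;
        if (negative.some(t => tags[t])) continue;
        for (const tag of Object.keys(tags)) counts[tag] = (counts[tag] || 0) + 1;
    }
    // Most frequent first, dropping tags the user has already typed
    const typed = new Set([...positive, ...negative]);
    return Object.entries(counts)
        .sort((a, b) => b[1] - a[1])
        .filter(([tag]) => !typed.has(tag));
}

const entries = [
    ["a", { forest: true, night: true }],
    ["b", { forest: true, day: true }],
    ["c", { city: true, night: true }],
];
console.log(countCoTags(entries, ["night"], ["city"])); // [ [ 'forest', 1 ] ]
```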

123

javascript/ext_wildcards.js

Normal file


@@ -0,0 +1,123 @@

// Regex
const WC_REGEX = /\b__([^,]+)__([^, ]*)\b/g;

// Trigger conditions
const WC_TRIGGER = () => CFG.useWildcards && [...tagword.matchAll(WC_REGEX)].length > 0;
const WC_FILE_TRIGGER = () => CFG.useWildcards && (tagword.startsWith("__") && !tagword.endsWith("__") || tagword === "__");

class WildcardParser extends BaseTagParser {
    async parse() {
        // Show wildcards from a file with that name
        let wcMatch = [...tagword.matchAll(WC_REGEX)]
        let wcFile = wcMatch[0][1];
        let wcWord = wcMatch[0][2];

        // Look in normal wildcard files
        let wcFound = wildcardFiles.find(x => x[1].toLowerCase() === wcFile);
        // Use found wildcard file or look in external wildcard files
        let wcPair = wcFound || wildcardExtFiles.find(x => x[1].toLowerCase() === wcFile);

        let wildcards = (await readFile(`${wcPair[0]}/${wcPair[1]}.txt`)).split("\n")
            .filter(x => x.trim().length > 0 && !x.startsWith('#')); // Remove empty lines and comments

        let finalResults = [];
        let tempResults = wildcards.filter(x => (wcWord !== null && wcWord.length > 0) ? x.toLowerCase().includes(wcWord) : x) // Filter by tagword
        tempResults.forEach(t => {
            let result = new AutocompleteResult(t.trim(), ResultType.wildcardTag);
            result.meta = wcFile;
            finalResults.push(result);
        });

        return finalResults;
    }
}

class WildcardFileParser extends BaseTagParser {
    parse() {
        // Show available wildcard files
        let tempResults = [];
        if (tagword !== "__") {
            let lmb = (x) => x[1].toLowerCase().includes(tagword.replace("__", ""))
            tempResults = wildcardFiles.filter(lmb).concat(wildcardExtFiles.filter(lmb)) // Filter by tagword
        } else {
            tempResults = wildcardFiles.concat(wildcardExtFiles);
        }

        let finalResults = [];
        // Get final results
        tempResults.forEach(wcFile => {
            let result = new AutocompleteResult(wcFile[1].trim(), ResultType.wildcardFile);
            result.meta = "Wildcard file";
            finalResults.push(result);
        });

        return finalResults;
    }
}

async function load() {
    if (wildcardFiles.length === 0 && wildcardExtFiles.length === 0) {
        try {
            let wcFileArr = (await readFile(`${tagBasePath}/temp/wc.txt`)).split("\n");
            let wcBasePath = wcFileArr[0].trim(); // First line should be the base path
            wildcardFiles = wcFileArr.slice(1)
                .filter(x => x.trim().length > 0) // Remove empty lines
                .map(x => [wcBasePath, x.trim().replace(".txt", "")]); // Remove file extension & newlines

            // To support multiple sources, we need to separate them using the provided "-----" strings
            let wcExtFileArr = (await readFile(`${tagBasePath}/temp/wce.txt`)).split("\n");
            let splitIndices = [];
            for (let index = 0; index < wcExtFileArr.length; index++) {
                if (wcExtFileArr[index].trim() === "-----") {
                    splitIndices.push(index);
                }
            }
            // For each group, add them to the wildcardFiles array with the base path as the first element
            for (let i = 0; i < splitIndices.length; i++) {
                let start = splitIndices[i - 1] || 0;
                if (i > 0) start++; // Skip the "-----" line
                let end = splitIndices[i];

                let wcExtFile = wcExtFileArr.slice(start, end);
                let base = wcExtFile[0].trim() + "/";
                wcExtFile = wcExtFile.slice(1)
                    .filter(x => x.trim().length > 0) // Remove empty lines
                    .map(x => x.trim().replace(base, "").replace(".txt", "")); // Remove file extension & newlines;

                wcExtFile = wcExtFile.map(x => [base, x]);
                wildcardExtFiles.push(...wcExtFile);
            }
        } catch (e) {
            console.error("Error loading wildcards: " + e);
        }
    }
}

function sanitize(tagType, text) {
    if (tagType === ResultType.wildcardFile) {
        return `__${text}__`;
    } else if (tagType === ResultType.wildcardTag) {
        return text.replace(/^.*?: /g, "");
    }
    return null;
}

function keepOpenIfWildcard(tagType, sanitizedText, newPrompt, textArea) {
    // If it's a wildcard, we want to keep the results open so the user can select another wildcard
    if (tagType === ResultType.wildcardFile) {
        hideBlocked = true;
        autocomplete(textArea, newPrompt, sanitizedText);
        setTimeout(() => { hideBlocked = false; }, 100);
        return true;
    }
    return false;
}

// Register the parsers
PARSERS.push(new WildcardParser(WC_TRIGGER));
PARSERS.push(new WildcardFileParser(WC_FILE_TRIGGER));

// Add our utility functions to their respective queues
QUEUE_FILE_LOAD.push(load);
QUEUE_SANITIZE.push(sanitize);
QUEUE_AFTER_INSERT.push(keepOpenIfWildcard);
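The `wce.txt` parsing in `load()` treats each `-----` line as the end of a group whose first line is a base path. A hypothetical standalone `parseExtWildcardList` with the same output shape (`[base + "/", name]` pairs) might look like this; unlike the original, it also skips blank lines before taking the base path, and the sample paths are made up for illustration.

```javascript
// Hypothetical standalone version of the wce.txt parsing in load() above.
// Assumed format: each group starts with a base path, lists one file per line,
// and is terminated by a "-----" line.
function parseExtWildcardList(text) {
    const result = [];
    let group = [];
    for (const line of text.split("\n").map(l => l.trim())) {
        if (line === "-----") {
            // The first line of a group is its base path; the rest are wildcard files
            const [basePath, ...files] = group;
            if (basePath !== undefined) {
                const base = basePath + "/";
                for (const file of files) {
                    // Strip the base path and the ".txt" extension, keeping [base, name]
                    result.push([base, file.replace(base, "").replace(".txt", "")]);
                }
            }
            group = [];
        } else if (line.length > 0) {
            group.push(line); // Skip empty lines
        }
    }
    // Like the original loop, lines after the last "-----" are ignored
    return result;
}

const wce = [
    "/ext/a/wildcards", "/ext/a/wildcards/colors.txt", "/ext/a/wildcards/sub/animals.txt", "-----",
    "/ext/b/wildcards", "/ext/b/wildcards/styles.txt", "-----",
].join("\n");
console.log(parseExtWildcardList(wce));
```

Files in subfolders keep their relative path (`sub/animals`), which is what the `__name__` syntax then refers to.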

File diff suppressed because it is too large


@@ -5,14 +5,19 @@ import gradio as gr

from pathlib import Path
from modules import scripts, script_callbacks, shared, sd_hijack
import yaml
import time
import threading

# Webui root path
FILE_DIR = Path().absolute()
try:
from modules.paths import script_path, extensions_dir
# Webui root path
FILE_DIR = Path(script_path)

# The extension base path
EXT_PATH = FILE_DIR.joinpath('extensions')
# The extension base path
EXT_PATH = Path(extensions_dir)
except ImportError:
# Webui root path
FILE_DIR = Path().absolute()
# The extension base path
EXT_PATH = FILE_DIR.joinpath('extensions')

# Tags base path
TAGS_PATH = Path(scripts.basedir()).joinpath('tags')

@@ -20,7 +25,12 @@ TAGS_PATH = Path(scripts.basedir()).joinpath('tags')

# The path to the folder containing the wildcards and embeddings
WILDCARD_PATH = FILE_DIR.joinpath('scripts/wildcards')
EMB_PATH = Path(shared.cmd_opts.embeddings_dir)
HYP_PATH = Path(shared.cmd_opts.hypernetwork_dir)

try:
LORA_PATH = Path(shared.cmd_opts.lora_dir)
except AttributeError:
LORA_PATH = None

def find_ext_wildcard_paths():
"""Returns the path to the extension wildcards folder"""

@@ -69,8 +79,11 @@ def get_ext_wildcard_tags():

with open(path, encoding="utf8") as file:
data = yaml.safe_load(file)
for item in data:
wildcard_tags[count] = ','.join(data[item]['Tags'])
count += 1
if data[item] and 'Tags' in data[item]:
wildcard_tags[count] = ','.join(data[item]['Tags'])
count += 1
else:
print('Issue with tags found in ' + path.name + ' at item ' + item)
except yaml.YAMLError as exc:
print(exc)
# Sort by count

@@ -137,6 +150,22 @@ def get_embeddings(sd_model):


write_to_temp_file('emb.txt', results)

def get_hypernetworks():
"""Write a list of all hypernetworks"""

# Get a list of all hypernetworks in the folder
all_hypernetworks = [str(h.name) for h in HYP_PATH.rglob("*") if h.suffix in {".pt"}]
# Remove file extensions
return sorted([h[:h.rfind('.')] for h in all_hypernetworks], key=lambda x: x.lower())

def get_lora():
"""Write a list of all lora"""

# Get a list of all lora in the folder
all_lora = [str(l.name) for l in LORA_PATH.rglob("*") if l.suffix in {".safetensors", ".ckpt", ".pt"}]
# Remove file extensions
return sorted([l[:l.rfind('.')] for l in all_lora], key=lambda x: x.lower())


def write_tag_base_path():
"""Writes the tag base path to a fixed location temporary file"""

@@ -178,6 +207,8 @@ if not TEMP_PATH.exists():

write_to_temp_file('wc.txt', [])
write_to_temp_file('wce.txt', [])
write_to_temp_file('wcet.txt', [])
write_to_temp_file('hyp.txt', [])
write_to_temp_file('lora.txt', [])
# Only reload embeddings if the file doesn't exist, since they are already re-written on model load
if not TEMP_PATH.joinpath("emb.txt").exists():
write_to_temp_file('emb.txt', [])

@@ -202,7 +233,16 @@ if WILDCARD_EXT_PATHS is not None:

if EMB_PATH.exists():
# Get embeddings after the model loaded callback
script_callbacks.on_model_loaded(get_embeddings)


if HYP_PATH.exists():
hypernets = get_hypernetworks()
if hypernets:
write_to_temp_file('hyp.txt', hypernets)

if LORA_PATH is not None and LORA_PATH.exists():
lora = get_lora()
if lora:
write_to_temp_file('lora.txt', lora)

# Register autocomplete options
def on_ui_settings():

@@ -218,12 +258,15 @@ def on_ui_settings():

shared.opts.add_option("tac_activeIn.modelList", shared.OptionInfo("", "List of model names (with file extension) or their hashes to use as black/whitelist, separated by commas.", section=TAC_SECTION))
shared.opts.add_option("tac_activeIn.modelListMode", shared.OptionInfo("Blacklist", "Mode to use for model list", gr.Dropdown, lambda: {"choices": ["Blacklist","Whitelist"]}, section=TAC_SECTION))
# Results related settings
shared.opts.add_option("tac_slidingPopup", shared.OptionInfo(True, "Move completion popup together with text cursor", section=TAC_SECTION))
shared.opts.add_option("tac_maxResults", shared.OptionInfo(5, "Maximum results", section=TAC_SECTION))
shared.opts.add_option("tac_showAllResults", shared.OptionInfo(False, "Show all results", section=TAC_SECTION))
shared.opts.add_option("tac_resultStepLength", shared.OptionInfo(100, "How many results to load at once", section=TAC_SECTION))
shared.opts.add_option("tac_delayTime", shared.OptionInfo(100, "Time in ms to wait before triggering completion again (Requires restart)", section=TAC_SECTION))
shared.opts.add_option("tac_useWildcards", shared.OptionInfo(True, "Search for wildcards", section=TAC_SECTION))
shared.opts.add_option("tac_useEmbeddings", shared.OptionInfo(True, "Search for embeddings", section=TAC_SECTION))
shared.opts.add_option("tac_useHypernetworks", shared.OptionInfo(True, "Search for hypernetworks", section=TAC_SECTION))
shared.opts.add_option("tac_useLoras", shared.OptionInfo(True, "Search for Loras", section=TAC_SECTION))
shared.opts.add_option("tac_showWikiLinks", shared.OptionInfo(False, "Show '?' next to tags, linking to its Danbooru or e621 wiki page (Warning: This is an external site and very likely contains NSFW examples!)", section=TAC_SECTION))
# Insertion related settings
shared.opts.add_option("tac_replaceUnderscores", shared.OptionInfo(True, "Replace underscores with spaces on insertion", section=TAC_SECTION))

@@ -237,7 +280,43 @@ def on_ui_settings():

shared.opts.add_option("tac_translation.oldFormat", shared.OptionInfo(False, "Translation file uses old 3-column translation format instead of the new 2-column one", section=TAC_SECTION))
shared.opts.add_option("tac_translation.searchByTranslation", shared.OptionInfo(True, "Search by translation", section=TAC_SECTION))
# Extra file settings
shared.opts.add_option("tac_extra.extraFile", shared.OptionInfo("None", "Extra filename (do not use e621.csv here!)", gr.Dropdown, lambda: {"choices": csv_files_withnone}, refresh=update_tag_files, section=TAC_SECTION))
shared.opts.add_option("tac_extra.onlyAliasExtraFile", shared.OptionInfo(False, "Extra file in alias only format", section=TAC_SECTION))
shared.opts.add_option("tac_extra.extraFile", shared.OptionInfo("extra-quality-tags.csv", "Extra filename (for small sets of custom tags)", gr.Dropdown, lambda: {"choices": csv_files_withnone}, refresh=update_tag_files, section=TAC_SECTION))
shared.opts.add_option("tac_extra.addMode", shared.OptionInfo("Insert before", "Mode to add the extra tags to the main tag list", gr.Dropdown, lambda: {"choices": ["Insert before","Insert after"]}, section=TAC_SECTION))
# Custom mappings
shared.opts.add_option("tac_keymap", shared.OptionInfo(
"""{
"MoveUp": "ArrowUp",
"MoveDown": "ArrowDown",
"JumpUp": "PageUp",
"JumpDown": "PageDown",
"JumpToStart": "Home",
"JumpToEnd": "End",
"ChooseSelected": "Enter",
"ChooseFirstOrSelected": "Tab",
"Close": "Escape"
}""", """Configure Hotkeys. For possible values, see https://www.w3.org/TR/uievents-key, or leave empty / set to 'None' to disable. Must be valid JSON.""", gr.Code, lambda: {"language": "json", "interactive": True}, section=TAC_SECTION))
shared.opts.add_option("tac_colormap", shared.OptionInfo(
"""{
"danbooru": {