mirror of

https://github.com/DominikDoom/a1111-sd-webui-tagcomplete.git

synced 2026-01-27 03:29:55 +00:00

Compare commits

187 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

119a3ad51f | ||

|

|

c820a22149 | ||

|

|

eb1e1820f9 | ||

|

|

ef59cff651 | ||

|

|

a454383c43 | ||

|

|

bec567fe26 | ||

|

|

d4041096c9 | ||

|

|

0903259ddf | ||

|

|

f3e64b1fa5 | ||

|

|

312cec5d71 | ||

|

|

b71e6339bd | ||

|

|

7ddbc3c0b2 | ||

|

|

4c2ef8f770 | ||

|

|

97c5e4f53c | ||

|

|

1d8d9f64b5 | ||

|

|

7437850600 | ||

|

|

829a4a7b89 | ||

|

|

22472ac8ad | ||

|

|

5f77fa26d3 | ||

|

|

f810b2dd8f | ||

|

|

08d3436f3b | ||

|

|

afa13306ef | ||

|

|

95200e82e1 | ||

|

|

a63ce64f4e | ||

|

|

a966be7546 | ||

|

|

d37e37acfa | ||

|

|

342fbc9041 | ||

|

|

d496569c9a | ||

|

|

7778142520 | ||

|

|

cde90c13c4 | ||

|

|

231b121fe0 | ||

|

|

c659ed2155 | ||

|

|

0a4c17cada | ||

|

|

6e65811d4a | ||

|

|

03673c060e | ||

|

|

1c11c4ad5a | ||

|

|

30c9593d3d | ||

|

|

f840586b6b | ||

|

|

886704e351 | ||

|

|

41626d22c3 | ||

|

|

57076060df | ||

|

|

5ef346cde3 | ||

|

|

edf76d9df2 | ||

|

|

837dc39811 | ||

|

|

f1870b7e87 | ||

|

|

20b6635a2a | ||

|

|

1fe8f26670 | ||

|

|

e82e958c3e | ||

|

|

2dd48eab79 | ||

|

|

4df90f5c95 | ||

|

|

a156214a48 | ||

|

|

15478e73b5 | ||

|

|

fcacf7dd66 | ||

|

|

82f819f336 | ||

|

|

effda54526 | ||

|

|

434301738a | ||

|

|

58804796f0 | ||

|

|

668ca800b8 | ||

|

|

a7233a594f | ||

|

|

4fba7baa69 | ||

|

|

5ebe22ddfc | ||

|

|

44c5450b28 | ||

|

|

5fd48f53de | ||

|

|

7128efc4f4 | ||

|

|

bd0ddfbb24 | ||

|

|

3108daf0e8 | ||

|

|

446ac14e7f | ||

|

|

363895494b | ||

|

|

04551a8132 | ||

|

|

ffc0e378d3 | ||

|

|

440f109f1f | ||

|

|

80fb247dbe | ||

|

|

b3e71e840d | ||

|

|

998514bebb | ||

|

|

d7e98200a8 | ||

|

|

ac790c8ede | ||

|

|

22365ec8d6 | ||

|

|

030a83aa4d | ||

|

|

460d32a4ed | ||

|

|

581bf1e6a4 | ||

|

|

74ea5493e5 | ||

|

|

94ec8884c3 | ||

|

|

6cf9acd6ab | ||

|

|

109a8a155e | ||

|

|

3caa1b51ed | ||

|

|

b44c36425a | ||

|

|

1e81403180 | ||

|

|

0f487a5c5c | ||

|

|

2baa12fea3 | ||

|

|

1a9157fe6e | ||

|

|

67eeb5fbf6 | ||

|

|

5911248ab9 | ||

|

|

1c693c0263 | ||

|

|

11ffed8afc | ||

|

|

cb54b66eda | ||

|

|

92a937ad01 | ||

|

|

ba9dce8d90 | ||

|

|

2622e1b596 | ||

|

|

b03b1a0211 | ||

|

|

3e33169a3a | ||

|

|

d8d991531a | ||

|

|

f626b9453d | ||

|

|

5067afeee9 | ||

|

|

018c6c8198 | ||

|

|

2846d79b7d | ||

|

|

783a847978 | ||

|

|

44effca702 | ||

|

|

475ef59197 | ||

|

|

3953260485 | ||

|

|

0a8e7d7d84 | ||

|

|

46d07d703a | ||

|

|

bd1dbe92c2 | ||

|

|

66fa745d6f | ||

|

|

37b5dca66e | ||

|

|

5db035cc3a | ||

|

|

90cf3147fd | ||

|

|

4d4f23e551 | ||

|

|

80b47c61bb | ||

|

|

57821aae6a | ||

|

|

e23bb6d4ea | ||

|

|

d4cca00575 | ||

|

|

86ea94a565 | ||

|

|

53f46c91a2 | ||

|

|

e5f93188c3 | ||

|

|

3e57842ac6 | ||

|

|

32c4589df3 | ||

|

|

5bbd97588c | ||

|

|

b2a663f7a7 | ||

|

|

6f93d19a2b | ||

|

|

79bab04fd2 | ||

|

|

5b69d1e622 | ||

|

|

651cf5fb46 | ||

|

|

5deb72cddf | ||

|

|

97ebe78205 | ||

|

|

b937e853c9 | ||

|

|

f63bbf947f | ||

|

|

16bc6d8868 | ||

|

|

ebe276ee44 | ||

|

|

995a5ecdba | ||

|

|

90d144a5f4 | ||

|

|

14a4440c33 | ||

|

|

cdf092f3ac | ||

|

|

e1598378dc | ||

|

|

599ad7f95f | ||

|

|

0b2bb138ee | ||

|

|

4a415f1a04 | ||

|

|

21de5fe003 | ||

|

|

a020df91b2 | ||

|

|

0260765b27 | ||

|

|

638c073f37 | ||

|

|

d11b53083b | ||

|

|

571072eea4 | ||

|

|

acfdbf1ed4 | ||

|

|

2e271aea5c | ||

|

|

b28497764f | ||

|

|

0d9d5f1e44 | ||

|

|

de3380818e | ||

|

|

acb85d7bb1 | ||

|

|

39ea33be9f | ||

|

|

1cac893e63 | ||

|

|

94823b871c | ||

|

|

599ff8a6f2 | ||

|

|

6893113e0b | ||

|

|

ed89d0e7e5 | ||

|

|

e47c14ab5e | ||

|

|

2ae512c8be | ||

|

|

4a427e309b | ||

|

|

510ee66b92 | ||

|

|

c41372143d | ||

|

|

f1d911834b | ||

|

|

40d9fc1079 | ||

|

|

88fa4398c8 | ||

|

|

3496fa58d9 | ||

|

|

737b697357 | ||

|

|

8523d7e9b5 | ||

|

|

77c0970500 | ||

|

|

707202ed71 | ||

|

|

922414b4ba | ||

|

|

7f18856321 | ||

|

|

cc1f35fc68 | ||

|

|

ae0f80ab0e | ||

|

|

8a436decf2 | ||

|

|

7357ccb347 | ||

|

|

d7075c5468 | ||

|

|

4923c8a177 | ||

|

|

9632909f72 | ||

|

|

7be3066d77 |

1

.gitignore

vendored

1

.gitignore

vendored

@@ -1,2 +1,3 @@

|

||||

tags/temp/

|

||||

__pycache__/

|

||||

tags/tag_frequency.db

|

||||

|

||||

111

README.md

111

README.md

@@ -20,6 +20,10 @@ Booru style tag autocompletion for the AUTOMATIC1111 Stable Diffusion WebUI

|

||||

</div>

|

||||

<br/>

|

||||

|

||||

#### ⚠️ Notice:

|

||||

I am currently looking for feedback on a new feature I'm working on and want to release soon.<br/>

|

||||

Please check [the announcement post](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/discussions/270) for more info if you are interested to help.

|

||||

|

||||

# 📄 Description

|

||||

|

||||

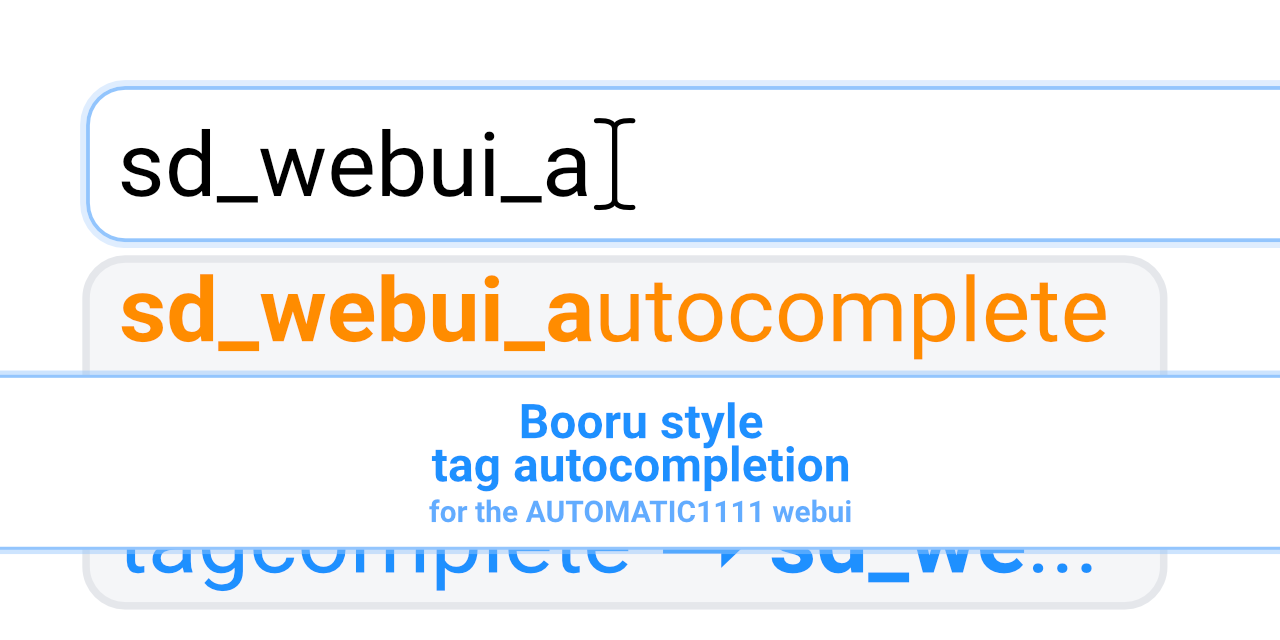

Tag Autocomplete is an extension for the popular [AUTOMATIC1111 web UI](https://github.com/AUTOMATIC1111/stable-diffusion-webui) for Stable Diffusion.

|

||||

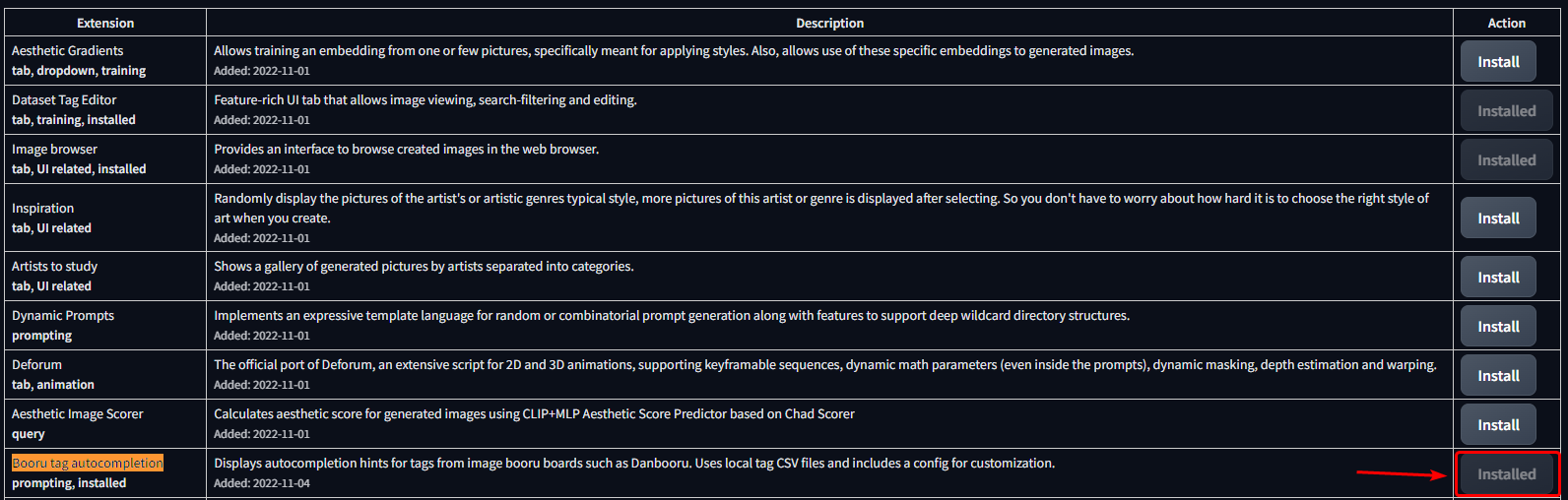

@@ -42,7 +46,7 @@ You can install it using the inbuilt available extensions list, clone the files

|

||||

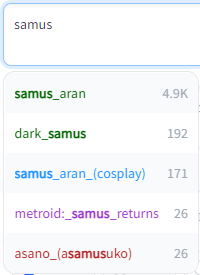

Tag autocomplete supports built-in completion for:

|

||||

- 🏷️ **Danbooru & e621 tags** (Top 100k by post count, as of November 2022)

|

||||

- ✳️ [**Wildcards**](#wildcards)

|

||||

- ➕ [**Extra network**](#extra-networks-embeddings-hypernets-lora) filenames, including

|

||||

- ➕ [**Extra network**](#extra-networks-embeddings-hypernets-lora-) filenames, including

|

||||

- Textual Inversion embeddings [(jump to readme section)]

|

||||

- Hypernetworks

|

||||

- LoRA

|

||||

@@ -74,6 +78,10 @@ Wildcard script support:

|

||||

|

||||

https://user-images.githubusercontent.com/34448969/200128031-22dd7c33-71d1-464f-ae36-5f6c8fd49df0.mp4

|

||||

|

||||

Extra Network preview support:

|

||||

|

||||

https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/assets/34448969/3c0cad84-fb5f-436d-b05a-28db35860d13

|

||||

|

||||

Dark and Light mode supported, including tag colors:

|

||||

|

||||

|

||||

@@ -123,6 +131,49 @@ Completion for these types is triggered by typing `<`. By default it will show t

|

||||

- Or `<lora:` and `<lyco:` respectively for the long form

|

||||

- `<h:` or `<hypernet:` will only show Hypernetworks

|

||||

|

||||

### Live previews

|

||||

Tag Autocomplete will now also show the preview images used for the cards in the Extra Networks menu in a small window next to the regular popup.

|

||||

This enables quick comparisons and additional info for unclear filenames without having to stop typing to look it up in the webui menu.

|

||||

It works for all supported extra network types that use preview images (Loras/Lycos, Embeddings & Hypernetworks). The preview window will stay hidden for normal tags or if no preview was found.

|

||||

|

||||

|

||||

|

||||

### Lora / Lyco trigger word completion

|

||||

This feature will try to add known trigger words on autocompleting a Lora/Lyco.

|

||||

|

||||

It primarily uses the list provided by the [model-keyword](https://github.com/mix1009/model-keyword/) extension, which thus needs to be installed to use this feature. The list is also regularly updated through it.

|

||||

However, once installed, you can deactivate it if you want, since tag autocomplete only needs the local keyword lists it ships with, not the extension itself.

|

||||

The used files are `lora-keyword.txt` and `lora-keyword-user.txt` in the model-keyword installation folder.

|

||||

If the main file isn't found, the feature will simply deactivate itself, everything else should work normally.

|

||||

|

||||

#### Note:

|

||||

As of [v1.5.0](https://github.com/AUTOMATIC1111/stable-diffusion-webui/commit/a3ddf464a2ed24c999f67ddfef7969f8291567be), the webui provides a native method to add activation keywords for Lora through the Extra networks config UI.

|

||||

These trigger words will always be preferred over the model-keyword ones and can be used without needing to install the model-keyword extension. This will however, obviously, be limited to those manually added keywords. For automatic discovery of keywords, you will still need the big list provided by model-keyword.

|

||||

|

||||

Custom trigger words can be added through two methods:

|

||||

1. Using the extra networks UI (recommended):

|

||||

- Only works with webui version v1.5.0 upwards, but much easier to use and works without the model-keyword extension

|

||||

- This method requires no manual refresh

|

||||

- <details>

|

||||

<summary>Image example</summary>

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

2. Through the model-keyword UI:

|

||||

- One issue with this method is that it has no official support for the Lycoris extension and doesn't scan its folder for files, so to add them through the UI you will have to temporarily move them into the Lora model folder to be able to select them in model-keywords dropdown. Some are already included in the default list though, so trying it out first is advisable.

|

||||

- After having added your custom keywords, you will need to either restart the UI or use the "Refresh TAC temp files" setting button.

|

||||

- <details>

|

||||

<summary>Image example</summary>

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

Sometimes the inserted keywords can be wrong due to a hash collision, however model-keyword and tag autocomplete take the name of the file into account too if the collision is known.

|

||||

|

||||

If it still inserts something wrong or you simply don't want the keywords added that time, you can undo / redo it directly after as often as you want, until you type something else

|

||||

(It uses the default undo/redo action of the browser, so <kbd>CTRL</kbd> + <kbd>Z</kbd>, context menu and mouse macros should all work).

|

||||

|

||||

### Embedding type filtering

|

||||

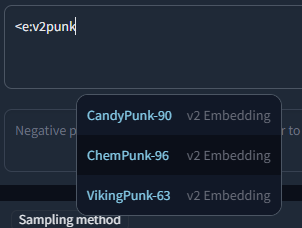

Embeddings trained for Stable Diffusion 1.x or 2.x models respectively are incompatible with the other type. To make it easier to find valid embeds, they are categorized by "v1 Embedding" and "v2 Embedding", including a slight color difference. You can also filter your search to include only v1 or v2 embeddings by typing `<v1/2` or `<e:v1/2` followed by the actual search term.

|

||||

|

||||

@@ -271,6 +322,14 @@ If this option is turned on, it will show a `?` link next to the tag. Clicking t

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Wiki links -->

|

||||

<details>

|

||||

<summary>Extra network live previews</summary>

|

||||

|

||||

This option enables a small preview window alongside the normal completion popup that will show the card preview also usd in the extra networks tab for that file.

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Insertion -->

|

||||

<details>

|

||||

<summary>Completion settings</summary>

|

||||

@@ -285,6 +344,49 @@ Depending on the last setting, tag autocomplete will append a comma and space af

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Lora keywords -->

|

||||

<details>

|

||||

<summary>Lora / Lyco trigger word insertion</summary>

|

||||

|

||||

See [the detailed readme section](#lora--lyco-trigger-word-completion) for more info.

|

||||

|

||||

Selects the mode to use for Lora / Lyco trigger word insertion.

|

||||

Needs the [model-keyword](https://github.com/mix1009/model-keyword/) extension to be installed, else it will do nothing.

|

||||

|

||||

- Never

|

||||

- Will not complete trigger words, even if the model-keyword extension is installed

|

||||

- Only user list

|

||||

- Will only load the custom keywords specified in the lora-keyword-user.txt file and ignore the default list

|

||||

- Always

|

||||

- Will load and use both lists

|

||||

|

||||

Switching from "Never" to what you had before or back will not require a restart, but changing between the full and user only list will.

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Wildcard path mode -->

|

||||

<details>

|

||||

<summary>Wildcard path completion</summary>

|

||||

|

||||

Some collections of wildcards are organized in nested subfolders.

|

||||

They are listed with the full path to the file, like "hair/colors/light/..." or "clothing/male/casual/..." etc.

|

||||

|

||||

In these cases it is often hard to type the full path manually, but you still want to narrow the selection before further scrolling in the list.

|

||||

For this, a partial completion of the path can be triggered with <kbd>Tab</kbd> (or the custom hotkey for `ChooseSelectedOrFirst`) if the results to choose from are all paths.

|

||||

|

||||

This setting determines the mode it should use for completion:

|

||||

- To next folder level:

|

||||

- Completes until the next `/` in the path, preferring your selection as the way forward

|

||||

- If you want to directly choose an option, <kbd>Enter</kbd> / the `ChooseSelected` hotkey will skip it and fully complete.

|

||||

- To first difference:

|

||||

- Completes until the first difference in the results and waits for additional info

|

||||

- E.g. if you have "/sub/folder_a/..." and "/sub/folder_b/...", completing after typing "su" will insert everything up to "/sub/folder_" and stop there until you type a or b to clarify.

|

||||

- If you selected something with the arrow keys (regardless of pressing Enter or Tab), it will skip it and fully complete.

|

||||

- Always fully:

|

||||

- As the name suggests, disables the partial completion behavior and inserts the full path under all circumstances like with normal tags.

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Alias -->

|

||||

<details>

|

||||

<summary>Alias settings</summary>

|

||||

@@ -376,7 +478,9 @@ You can also add this to your quicksettings bar to have the refresh button avail

|

||||

|

||||

# Translations

|

||||

An additional file can be added in the translation section, which will be used to translate both tags and aliases and also enables searching by translation.

|

||||

This file needs to be a CSV in the format `<English tag/alias>,<Translation>`, but for backwards compatibility with older files that used a three column format, you can turn on `Translation file uses old 3-column translation format instead of the new 2-column one` to support them. In that case, the second column will be unused and skipped during parsing.

|

||||

This file needs to be a CSV in the format `<English tag/alias>,<Translation>`. Some older files use a three column format, which requires a compatibility setting to be activated.

|

||||

You can find it under `Settings > Tag autocomplete > Translation filename > Translation file uses old 3-column translation format instead of the new 2-column one`.

|

||||

With it on, the second column will be unused and skipped during parsing.

|

||||

|

||||

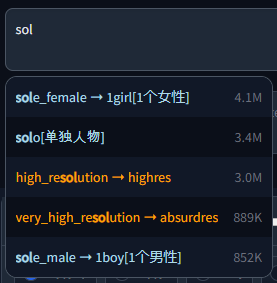

Example with Chinese translation:

|

||||

|

||||

@@ -386,6 +490,7 @@ Example with Chinese translation:

|

||||

## List of translations

|

||||

- [🇨🇳 Chinese tags](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/discussions/23) by @HalfMAI, using machine translation and manual correction for the most common tags (uses legacy format)

|

||||

- [🇨🇳 Chinese tags](https://github.com/sgmklp/tag-for-autocompletion-with-translation) by @sgmklp, smaller set of manual translations based on https://github.com/zcyzcy88/TagTable

|

||||

- [🇯🇵 Japanese tags](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/discussions/265) by @applemango, both machine and human translations available

|

||||

|

||||

> ### 🫵 I need your help!

|

||||

> Translations are a community effort. If you have translated a tag file or want to create one, please open a Pull Request or Issue so your link can be added here.

|

||||

@@ -495,4 +600,4 @@ to force-reload the site without cache if e.g. a new feature doesn't appear for

|

||||

[multidiffusion-url]: https://github.com/pkuliyi2015/multidiffusion-upscaler-for-automatic1111

|

||||

[tag-editor-url]: https://github.com/toshiaki1729/stable-diffusion-webui-dataset-tag-editor

|

||||

[wd-tagger-url]: https://github.com/toriato/stable-diffusion-webui-wd14-tagger

|

||||

[umi-url]: https://github.com/Klokinator/Umi-AI

|

||||

[umi-url]: https://github.com/Klokinator/Umi-AI

|

||||

|

||||

429

README_JA.md

429

README_JA.md

@@ -1,21 +1,67 @@

|

||||

|

||||

|

||||

# Booru tag autocompletion for A1111

|

||||

<div align="center">

|

||||

|

||||

[](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/releases)

|

||||

# SD WebUI Tag Autocomplete

|

||||

## [English Document](./README.md), [中文文档](./README_ZH.md), 日本語

|

||||

|

||||

## [English Document](./README.md), [中文文档](./README_ZH.md)

|

||||

Booruスタイルタグを自動補完するためのAUTOMATIC1111 Stable Diffusion WebUI用拡張機能

|

||||

|

||||

このカスタムスクリプトは、Stable Diffusion向けの人気のweb UIである、[AUTOMATIC1111 web UI](https://github.com/AUTOMATIC1111/stable-diffusion-webui)の拡張機能として利用できます。

|

||||

[![Github Release][release-shield]][release-url]

|

||||

[![stargazers][stargazers-shield]][stargazers-url]

|

||||

[![contributors][contributors-shield]][contributors-url]

|

||||

[![forks][forks-shield]][forks-url]

|

||||

[![issues][issues-shield]][issues-url]

|

||||

|

||||

[変更内容][release-url] •

|

||||

[確認されている問題](#%EF%B8%8F-よくある問題また現在確認されている問題) •

|

||||

[バグを報告する][issues-url] •

|

||||

[機能追加に関する要望][issues-url]

|

||||

</div>

|

||||

<br/>

|

||||

|

||||

# 📄 説明

|

||||

|

||||

Tag AutocompleteはStable Diffusion向けの人気のweb UIである、[AUTOMATIC1111 web UI](https://github.com/AUTOMATIC1111/stable-diffusion-webui)の拡張機能として利用できます。

|

||||

|

||||

主にアニメ系イラストを閲覧するための掲示板「Danbooru」などで利用されているタグの自動補完ヒントを表示するための拡張機能となります。

|

||||

[Waifu Diffusion](https://github.com/harubaru/waifu-diffusion)など、この情報を使って学習させたStable Diffusionモデルもあるため、正確なタグをプロンプトに使用することで、構図を改善し、思い通りの画像が生成できるようになります。

|

||||

例えば[Waifu Diffusion](https://github.com/harubaru/waifu-diffusion)やNAIから派生した多くのモデルやマージなど、Stable Diffusionモデルの中にはこの情報を使って学習されたものもあるため、プロンプトに正確なタグを使用することで、多くのケースで構図を改善した思い通りの画像が生成できるようになります。

|

||||

|

||||

web UIに内蔵されている利用可能な拡張機能リストを使用してインストールするか、[以下の説明](#インストール)に従ってファイルを手動でcloneするか、または[リリース](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/releases)からパッケージ化されたバージョンを使用することができます。

|

||||

組み込みの利用可能な拡張機能リストを使ってインストールしたり、[下記](#-インストール)の説明に従って手動でファイルをcloneしたり、[Releases](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/releases)にあるパッケージ済みのバージョンを使うことができます。

|

||||

|

||||

## よく発生する問題 & 発見されている課題:

|

||||

- ブラウザの設定によっては、古いバージョンのスクリプトがキャッシュされていることがあります。アップデート後に新機能が表示されない場合などには、`CTRL+F5`でキャッシュを利用せずにサイトを強制的にリロードしてみてください。

|

||||

- プロンプトのポップアップのスタイルが崩れていたり、全く表示されない場合([このような場合](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/assets/34448969/7bbfdd54-fc23-4bfc-85af-24704b139b3a))、openpose-editor拡張機能をインストールしている場合は、必ずアップデートしてください。古いバージョンでは、他の拡張機能との間で問題が発生することが知られています。

|

||||

<br/>

|

||||

|

||||

# ✨ Features

|

||||

- 🚀 タイピング中に補完のためのヒントを表示 (通常時)

|

||||

- ⌨️ キーボードナビゲーション

|

||||

- 🌒 ダーク&ライトモードのサポート

|

||||

- 🛠️ 多くの[設定](#%EF%B8%8F-設定)とカスタマイズ性を提供

|

||||

- 🌍 [翻訳サポート](#翻訳)タグ、オプションでプロンプトのライブ プレビュー付き

|

||||

- 私が知っている翻訳のリストは[こちら](#翻訳リスト)を参照してください。

|

||||

|

||||

タグの自動補完は組み込まれている補完内容をサポートしています:

|

||||

- 🏷️ **Danbooru & e621 tags** (投稿数上位100k、2022年11月現在)

|

||||

- ✳️ [**ワイルドカード**](#ワイルドカード)

|

||||

- ➕ [**Extra networks**](#extra-networks-embeddings-hypernets-lora-) filenames, including

|

||||

- Textual Inversion embeddings

|

||||

- Hypernetworks

|

||||

- LoRA

|

||||

- LyCORIS / LoHA

|

||||

- 🪄 [**Chants(詠唱)**](#chants詠唱) (長いプロンプトプリセット用のカスタムフォーマット)

|

||||

- 🏷️ "[**Extra file**](#extra-file)", カスタマイズ可能なextra tagsセット

|

||||

|

||||

|

||||

さらに、サードパーティの拡張機能にも対応しています:

|

||||

<details>

|

||||

<summary>クリックして開く</summary>

|

||||

|

||||

- [Image Browser][image-browser-url] - ファイル名とEXIFキーワードによる検索

|

||||

- [Multidiffusion Upscaler][multidiffusion-url] - 地域別のプロンプト

|

||||

- [Dataset Tag Editor][tag-editor-url] - キャプション, 結果の確認, タグの編集 & キャプションの編集

|

||||

- [WD 1.4 Tagger][wd-tagger-url] - 追加と除外タグ

|

||||

- [Umi AI][umi-url] - YAMLワイルドカードの補完

|

||||

</details>

|

||||

<br/>

|

||||

|

||||

## スクリーンショット & デモ動画

|

||||

<details>

|

||||

@@ -34,8 +80,8 @@ https://user-images.githubusercontent.com/34448969/200128031-22dd7c33-71d1-464f-

|

||||

|

||||

</details>

|

||||

|

||||

## インストール

|

||||

### 内蔵されている拡張機能リストを用いた方法

|

||||

# 📦 インストール

|

||||

## 内蔵されている拡張機能リストを用いた方法

|

||||

1. Extensions タブを開く

|

||||

2. Available タブを開く

|

||||

3. "Load from:" をクリック

|

||||

@@ -48,14 +94,14 @@ https://user-images.githubusercontent.com/34448969/200128031-22dd7c33-71d1-464f-

|

||||

|

||||

|

||||

|

||||

### 手動でcloneする方法

|

||||

## 手動でcloneする方法

|

||||

```bash

|

||||

git clone "https://github.com/DominikDoom/a1111-sd-webui-tagcomplete.git" extensions/tag-autocomplete

|

||||

```

|

||||

(第2引数でフォルダ名を指定可能なので、好きな名前を指定しても良いでしょう)

|

||||

|

||||

## 追加で有効化できる補完機能

|

||||

### ワイルドカード

|

||||

# ❇️ 追加で有効化できる補完機能

|

||||

## ワイルドカード

|

||||

|

||||

自動補完は、https://github.com/AUTOMATIC1111/stable-diffusion-webui-wildcards 、または他の類似のスクリプト/拡張機能で使用されるワイルドカードファイルでも利用可能です。補完は `__` (ダブルアンダースコア) と入力することで開始されます。最初にワイルドカードファイルのリストが表示され、1つを選択すると、そのファイル内の置換オプションが表示されます。

|

||||

これにより、スクリプトによって置換されるカテゴリを挿入するか、または直接1つを選択して、ワイルドカードをカテゴリ化されたカスタムタグシステムのようなものとして使用することができます。

|

||||

@@ -66,20 +112,21 @@ git clone "https://github.com/DominikDoom/a1111-sd-webui-tagcomplete.git" extens

|

||||

|

||||

ワイルドカードはすべての拡張機能フォルダと、古いバージョンをサポートするための `scripts/wildcards` フォルダで検索されます。これは複数の拡張機能からワイルドカードを組み合わせることができることを意味しています。ワイルドカードをグループ化した場合、ネストされたフォルダもサポートされます。

|

||||

|

||||

### Embeddings, Lora & Hypernets

|

||||

## Extra networks (Embeddings, Hypernets, LoRA, ...)

|

||||

これら3つのタイプの補完は、`<`と入力することで行われます。デフォルトでは3つとも混在して表示されますが、以下の方法でさらにフィルタリングを行うことができます:

|

||||

- `<e:` は、embeddingsのみを表示します。

|

||||

- `<l:` 、または `<lora:` はLoraのみを表示します。

|

||||

- `<l:` は、LoRAとLyCORISのみを表示します。

|

||||

- または `<lora:` と `<lyco:` で入力することも可能です

|

||||

- `<h:` 、または `<hypernet:` はHypernetworksのみを表示します

|

||||

|

||||

#### Embedding type filtering

|

||||

### Embedding type filtering

|

||||

Stable Diffusion 1.xまたは2.xモデル用にそれぞれトレーニングされたembeddingsは、他のタイプとの互換性がありません。有効なembeddingsを見つけやすくするため、若干の色の違いも含めて「v1 Embedding」と「v2 Embedding」で分類しています。また、`<v1/2`または`<e:v1/2`に続けて実際の検索のためのキーワードを入力すると、v1またはv2embeddingsのみを含むように検索を絞り込むことができます。

|

||||

|

||||

例:

|

||||

|

||||

|

||||

|

||||

### Chants(詠唱)

|

||||

## Chants(詠唱)

|

||||

Chants(詠唱)は、より長いプロンプトプリセットです。この名前は、中国のユーザーによる初期のプロンプト集からヒントを得たもので、しばしば「呪文書」(原文は「Spellbook」「Codex」)などと呼ばれていました。

|

||||

このような文書から得られるプロンプトのスニペットは、このような理由から呪文や詠唱と呼ばれるにふさわしいものでした。

|

||||

|

||||

@@ -89,8 +136,11 @@ EmbeddingsやLoraと同様に、この機能は `<`, `<c:`, `<chant:` コマン

|

||||

(masterpiece, best quality, high quality, highres, ultra-detailed),

|

||||

```

|

||||

|

||||

|

||||

Chants(詠唱)は、以下のフォーマットに従ってJSONファイルで追加することができます::

|

||||

|

||||

<details>

|

||||

<summary>Chant format (click to expand)</summary>

|

||||

|

||||

```json

|

||||

[

|

||||

{

|

||||

@@ -113,6 +163,10 @@ Chants(詠唱)は、以下のフォーマットに従ってJSONファイル

|

||||

}

|

||||

]

|

||||

```

|

||||

|

||||

</details>

|

||||

<br/>

|

||||

|

||||

このファイルが拡張機能の `tags` フォルダ内にある場合、settings内の"Chant file"ドロップダウンから選択することができます。

|

||||

|

||||

chantオブジェクトは4つのフィールドを持ちます:

|

||||

@@ -121,7 +175,7 @@ chantオブジェクトは4つのフィールドを持ちます:

|

||||

- `content` - 実際に挿入されるプロンプト

|

||||

- `color` - 表示される色。通常のタグと同じカテゴリーカラーシステムを使用しています。

|

||||

|

||||

### Umi AI tags

|

||||

## Umi AI tags

|

||||

https://github.com/Klokinator/Umi-AI は、Unprompted や Dynamic Wildcards に似た、機能豊富なワイルドカード拡張です。

|

||||

例えば `<[preset][--female][sfw][species]>` はプリセットカテゴリーを選び、女性関連のタグを除外し、さらに次のカテゴリーで絞り込み、実行時にこれらすべての条件に一致するランダムなフィルインを1つ選び出します。補完は `<[`] とそれに続く新しい開く括弧、例えば `<[xyz][`] で始まり、 `>` で閉じるまで続きます。

|

||||

|

||||

@@ -130,78 +184,264 @@ https://github.com/Klokinator/Umi-AI は、Unprompted や Dynamic Wildcards に

|

||||

|

||||

ほとんどの功績は[@ctwrs](https://github.com/ctwrs)によるものです。この方はUmiの開発者の一人として多くの貢献をしています。

|

||||

|

||||

## Settings

|

||||

# 🛠️ 設定

|

||||

|

||||

この拡張機能には、大量の設定&カスタマイズ性が組み込まれています:

|

||||

この拡張機能には多くの設定とカスタマイズ機能が組み込まれています。ほとんどのことははっきりしていますが、詳細な説明は以下のセクションをクリックしてください。

|

||||

|

||||

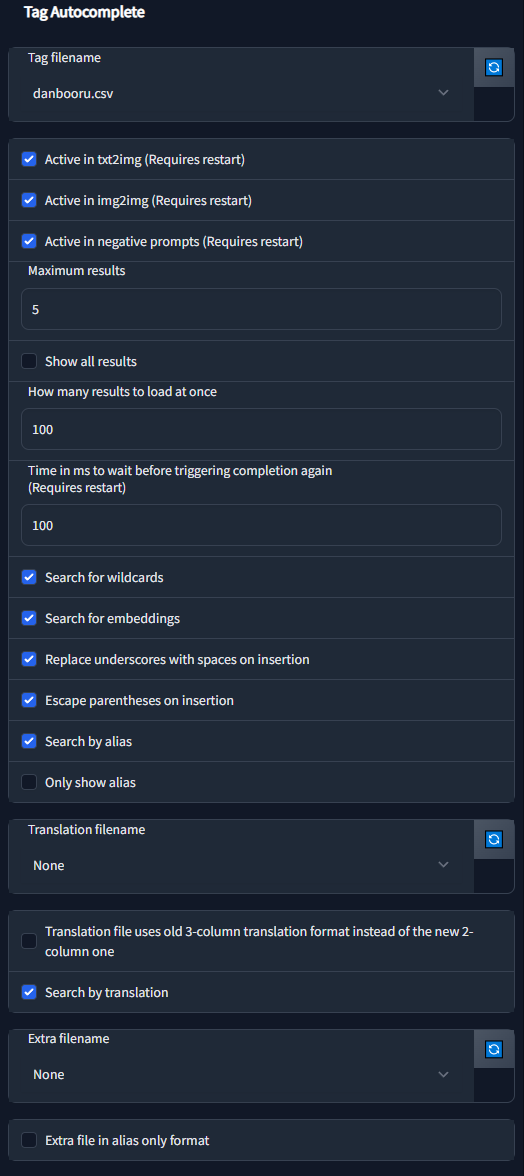

|

||||

<!-- Filename -->

|

||||

<details>

|

||||

<summary>Tag filename</summary>

|

||||

|

||||

| 設定項目 | 説明 |

|

||||

|---------|-------------|

|

||||

| tagFile | 使用するタグファイルを指定します。お好みのタグデータベースを用意することができますが、このスクリプトはDanbooruタグを想定して開発されているため、他の構成では正常に動作しない場合があります。|

|

||||

| activeIn | txt2img、img2img、またはその両方のネガティブプロンプトのスクリプトを有効、または無効にすることができます。 |

|

||||

| maxResults | 最大何件の結果を表示するか。デフォルトのタグセットでは、結果は出現回数順に表示されます。embeddingsとワイルドカードの場合は、スクロール可能なリストですべての結果を表示します。 |

|

||||

| resultStepLength | 長いリストやshowAllResultsがtrueの場合に、指定したサイズの小さなバッチで結果を読み込むことができるようにします。 |

|

||||

| delayTime | オートコンプリートを起動するまでの待ち時間をミリ秒単位で指定できます。これは入力中に頻繁に補完内容が更新されるのを防ぐのに役立ちます。 |

|

||||

| showAllResults | trueの場合、maxResultsを無視し、すべての結果をスクロール可能なリストで表示します。**警告:** 長いリストの場合、ブラウザが遅くなることがあります。 |

|

||||

| replaceUnderscores | trueにした場合、タグをクリックしたときに `_`(アンダースコア)が ` `(スペース)に置き換えられます。モデルによっては便利になるかもしれません。 |

|

||||

| escapeParentheses | trueの場合、()を含むタグをエスケープして、Web UIのプロンプトの重み付け機能に影響を与えないようにします。 |

|

||||

| appendComma | UIスイッチ "Append commas"の開始される値を指定することができます。UIのオプションが無効の場合、常にこの値が使用されます。 |

|

||||

| useWildcards | ワイルドカード補完機能の切り替えに使用します。 |

|

||||

| useEmbeddings | embedding補完機能の切り替えに使用します。 |

|

||||

| alias | エイリアスに関するオプションです。詳しくは下のセクションをご覧ください。 |

|

||||

| translation | 翻訳用のオプションです。詳しくは下のセクションをご覧ください。 |

|

||||

| extras | タグファイル/エイリアス/翻訳を追加するためのオプションです。詳しくは下記をご覧ください。 |

|

||||

| chantFile | chants(長いプロンプト・プリセット/ショートカット)に使用するためファイルです。 |

|

||||

| keymap | カスタマイズ可能なhotkeyを設定するために利用します。 |

|

||||

| colors | タグの色をカスタマイズできます。詳しくは下記をご覧ください。 |

|

||||

### Colors

|

||||

タグタイプに関する色は、タグ自動補完設定のためのJSONコードを変更することで指定することができます。

|

||||

フォーマットは標準的なJSONで、オブジェクト名は、それらが使用されるタグのファイル名(.csvを除く)に対応しています。

|

||||

角括弧の中の最初の値はダークモード、2番目の値はライトモードです。色の名称と16進数、どちらも使えるはずです。

|

||||

スクリプトが使用するメインのタグファイルとなります。デフォルトでは `danbooru.csv` と `e621.csv` が含まれており、ここにカスタムタグを追加することもできますが、大半のモデルはこの2つ以外(主にdanbooru)では学習していないため、あまり意味はありません。

|

||||

|

||||

```json

|

||||

{

|

||||

"danbooru": {

|

||||

"-1": ["red", "maroon"],

|

||||

"0": ["lightblue", "dodgerblue"],

|

||||

"1": ["indianred", "firebrick"],

|

||||

"3": ["violet", "darkorchid"],

|

||||

"4": ["lightgreen", "darkgreen"],

|

||||

"5": ["orange", "darkorange"]

|

||||

},

|

||||

"e621": {

|

||||

"-1": ["red", "maroon"],

|

||||

"0": ["lightblue", "dodgerblue"],

|

||||

"1": ["gold", "goldenrod"],

|

||||

"3": ["violet", "darkorchid"],

|

||||

"4": ["lightgreen", "darkgreen"],

|

||||

"5": ["tomato", "darksalmon"],

|

||||

"6": ["red", "maroon"],

|

||||

"7": ["whitesmoke", "black"],

|

||||

"8": ["seagreen", "darkseagreen"]

|

||||

}

|

||||

}

|

||||

```

|

||||

また、カスタムタグファイルの新しいカラーセットを追加する際にも使用できます。

|

||||

数字はタグの種類を指定するもので、タグのソースに依存します。例として、[CSV tag data](#csv-tag-data)を参照してください。

|

||||

拡張機能の他の機能(ワイルドカードやLoRA補完など)を使いたいが、通常のタグには興味がない場合は、`None`に設定することも可能です。

|

||||

|

||||

### エイリアス, 翻訳 & Extra tagsについて

|

||||

#### エイリアス

|

||||

Booruのサイトのように、タグは1つまたは複数のエイリアスを持つことができ、完了時に実際の値へリダイレクトされて入力されます。これらは `config.json` の設定をもとに検索/表示されます:

|

||||

- `searchByAlias` - エイリアスも検索対象とするか、実際のタグのみを検索対象とするかを設定します

|

||||

- `onlyShowAlias` - `alias -> actual` の代わりに、エイリアスのみを表示します。表示のみで、最後に挿入されるテキストは実際のタグのままです。

|

||||

|

||||

</details>

|

||||

|

||||

#### 翻訳

|

||||

<!-- Active In -->

|

||||

<details>

|

||||

<summary>"Active in" の設定</summary>

|

||||

|

||||

タグのオートコンプリートがどこにアタッチされ、変更を受け付けるかを指定します。

|

||||

ネガティブプロンプトはtxt2imgとimg2imgの設定に従うので、"親 "がアクティブな場合にのみアクティブとなります。

|

||||

|

||||

|

||||

</details>

|

||||

|

||||

<!-- Blacklist -->

|

||||

<details>

|

||||

<summary>Black / Whitelist</summary>

|

||||

|

||||

このオプションは、タグのオートコンプリートをグローバルにオフにすることができますが、特定のモデルに対してのみ有効または無効にしたい場合もあります。

|

||||

例えば、あなたのモデルのほとんどがアニメモデルである場合、boorタグでトレーニングされておらず、その恩恵を受けないフォトリアリスティックモデルをブラックリストに追加し、これらのモデルに切り替えた後に自動的に無効にすることができます。モデル名(拡張子を含む)とwebuiハッシュ(短い形式と長い形式の両方)の両方を使用できます。

|

||||

|

||||

`Blacklist`は指定したすべてのモデルを除外しますが、`Whitelist`はこれらのモデルに対してのみ有効で、デフォルトではオフのままです。例外として、空のホワイトリストは無視されます(空のブラックリストと同じです)。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Move Popup -->

|

||||

<details>

|

||||

<summary>カーソルで補完ポップアップを移動</summary>

|

||||

|

||||

このオプションは、IDEで行われるような、カーソルの位置に追従するフローティングポップアップを有効または無効にします。スクリプトはポップアップが右側でつぶれないように十分なスペースを確保しようとしますが、長いタグでは必ずしもうまくいきません。無効にした場合、ポップアップは左側に表示されます。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Results Count -->

|

||||

<details>

|

||||

<summary>結果の数</summary>

|

||||

|

||||

一度に表示する結果の量を設定できます。

|

||||

`Show all results`が有効な場合、`Maximum results`で指定された数で切り捨てられるのではなく、スクロール可能なリストが表示されます。パフォーマンス上の理由から、この場合はすべてを一度に読み込むのではなく、ブロック単位で読み込みます。ブロックの大きさは`How many results to load at once`によって決まります。一番下に到達すると、次のブロックがロードされます(しかし、そんなことはめったには起こらないと思います)。

|

||||

|

||||

特筆すべきこととして、`Show all results` が使用される場合でも、`Maximum results` は影響を及ぼします。これは、スクロールが開始される前のポップアップの高さを制限するからです。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Delay time -->

|

||||

<details>

|

||||

<summary>補完の遅れについて</summary>

|

||||

|

||||

設定によっては、リアルタイムのタグ補完は計算量が多くなることがあります。

|

||||

このオプションは debounce による遅延をミリ秒単位で設定します(1000ミリ秒 = 1秒)。このオプションは、入力が非常に速い場合に特に有効です。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Search for -->

|

||||

<details>

|

||||

<summary>"Search for" に関する設定</summary>

|

||||

|

||||

特定の補完タイプを有効または無効にします。

|

||||

|

||||

Umi AIワイルドカードは、使用目的が似ているため、異なるフォーマットを使用しますが、ここでは通常のワイルドカードオプションに含まれます。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Wiki links -->

|

||||

<details>

|

||||

<summary>"?" Wiki links</summary>

|

||||

|

||||

このオプションがオンになっている場合、タグの横に `?` リンクが表示されます。これをクリックすると、danbooruまたはe621のそのタグのWikiページを開こうとします。

|

||||

|

||||

> ⚠️ 警告:

|

||||

>

|

||||

> Danbooruとe621は外部サイトであり、多くのNSFWコンテンツを含んでいます。このため、このオプションはデフォルトで無効になっています。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Insertion -->

|

||||

<details>

|

||||

<summary>補完設定</summary>

|

||||

|

||||

これらの設定で、テキストの挿入方法を指定できます。

|

||||

|

||||

Booruのサイトでは、タグにスペースの代わりにアンダースコアを使用することがほとんどですが、Stable diffusionで使用されているCLIPエンコーダーは自然言語でトレーニングされているため、前処理中にほとんどのモデルがこのアンダースコアをスペースに置き換えました。したがって、デフォルトではタグのオートコンプリートも同じようになります。

|

||||

|

||||

括弧は、プロンプトの特定の部分をより注目/重視するために、Webuiの制御文字として使用されるため、デフォルトでは括弧を含むタグはエスケープされます (`\( \)`) 。

|

||||

|

||||

最後の設定によりますが、タグのオートコンプリートはタグを挿入した後にカンマとスペースを追加します。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Wildcard path mode -->

|

||||

<details>

|

||||

<summary>ワイルドカードのパス補完</summary>

|

||||

|

||||

ワイルドカードのいくつかのコレクションは、ネストしたサブフォルダに整理されています。

|

||||

それらは、"hair/colors/light/... " や "clothing/male/casual/... " などのように、ファイルへのフルパスとともにリストアップされています。

|

||||

|

||||

このような場合、手動でフルパスを入力するのは難しいことが多いのですが、それでもリストをさらにスクロールする前に選択範囲を狭めたいものです。

|

||||

この場合、選択する結果がすべてのパスであれば、<kbd>Tab</kbd>(または`ChooseSelectedOrFirst`のカスタムホットキー)でパスの部分補完をトリガーすることが可能です。

|

||||

|

||||

この設定は、補完に使用するモードを決定します:

|

||||

- 次のフォルダレベルまで:

|

||||

- パス内の次の/まで補完し、選択したものを進む方向として優先します

|

||||

- オプションを直接選択したい場合は、<kbd>Enter</kbd> キーまたは `ChooseSelected` ホットキーを使用してスキップし、完全に補完します。

|

||||

- 最初の差分まで:

|

||||

- 結果内の最初の違いまで補完し、追加の情報を待ちます

|

||||

- 例:"/sub/folder_a/..." と "/sub/folder_b/..." がある場合、"su" と入力した後に補完すると、"/sub/folder_" まですべてを挿入し、a または b を入力して明確にするまでそこで停止します。

|

||||

- 矢印キーで何かを選択した場合(EnterキーやTabキーを押すかどうかに関係なく)、それをスキップして完全に補完します。

|

||||

- 常に全て:

|

||||

- 名前が示すように、部分的な補完動作を無効にし、通常のタグのようにすべての状況下で完全なパスを挿入します。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Alias -->

|

||||

<details>

|

||||

<summary>Alias 設定</summary>

|

||||

|

||||

タグはしばしば複数の別名(Alias)で参照されます。`Search by alias`がオンになっている場合、それらは検索結果に含まれ、正しいタグがわからない場合に役立ちます。この場合でも、挿入時にリンクされている実際のタグに置き換えられます。

|

||||

|

||||

`Only show alias` セットは、エイリアスのみを表示したい場合、またはそのエイリアスがマップするタグも表示したい場合に使用します。

|

||||

(`<alias> ➝ <actual>`として表示されます)

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Translations -->

|

||||

<details>

|

||||

<summary>翻訳設定</summary>

|

||||

|

||||

Tag Autocompleteは、別のファイル(`Translation filename`)で指定されたタグの翻訳をサポートしています。つまり、英語のタグ名が分からなくても、自身の言語の翻訳ファイルがあれば、それを代わりに使うことができます。

|

||||

|

||||

また、コミュニティで使用されている古いファイルのためのレガシーフォーマットオプションや、プロンプト全体のライブ翻訳プレビューなど実験的な機能もあります。

|

||||

|

||||

詳細については、以下の [翻訳に関するセクション](#翻訳) を参照してください。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Extra file -->

|

||||

<details>

|

||||

<summary>Extra ファイル設定</summary>

|

||||

|

||||

ここで指定したように、通常の結果の前後に追加される追加タグのセットを指定します。一般的に使用される品質タグ (`masterpiece, best quality,` など) のような小さなカスタムタグセットに便利です。

|

||||

|

||||

長いプリセットやプロンプト全体を補完したい場合は、代わりに [Chants(詠唱)](#chants詠唱) を参照してください。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Chants -->

|

||||

<details>

|

||||

<summary>Chant ファイル名</summary>

|

||||

|

||||

Chantとは、長いプリセット、あるいはプロンプト全体を一度に選択して挿入できるもので、Webuiに内蔵されているスタイルのドロップダウンに似ています。Chantにはいくつかの追加機能があり、より速く使用することができます。

|

||||

|

||||

詳しくは上記の[Chants(詠唱)](#chants詠唱)のセクションを参照してください。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Hotkeys -->

|

||||

<details>

|

||||

<summary>Hotkeys</summary>

|

||||

|

||||

ほとんどのキーボードナビゲーション機能のホットキーをここで指定できます。

|

||||

https://www.w3.org/TR/uievents-key/#named-key-attribute-value

|

||||

|

||||

機能の説明

|

||||

- Move Up / Down:次のタグを選択

|

||||

- Jump Up / Down:一度に5箇所移動する。

|

||||

- Jump to Start / End: リストの先頭または末尾にジャンプ

|

||||

- ChooseSelected ハイライトされたタグを選択するか、何も選択されていない場合はポップアップを閉じます。

|

||||

- ChooseSelectedOrFirst:上記と同じですが、何も選択されていない場合、デフォルトで最初の結果が選択されます。

|

||||

- Close ポップアップを閉じる

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Colors -->

|

||||

<details>

|

||||

<summary>Colors</summary>

|

||||

|

||||

ここでは、異なるタグカテゴリーに使用されるデフォルトの色を変更することができます。これらは、ソースサイトのカテゴリの色に似ているように選択されています。

|

||||

|

||||

フォーマットは標準的なJSON

|

||||

- オブジェクト名は、タグのファイル名に対応しています。

|

||||

- 数字はタグの種類を表し、タグのソースに依存します。詳細については、[CSV tag data](#csv-tag-data)を参照してください。

|

||||

- 角括弧内の最初の値はダークモード、2番目の値はライトモードです。HTMLの色名と16進数コードのどちらでも使えます。

|

||||

|

||||

これは、カスタムタグ・ファイルに新しいカラーセットを追加するためにも使用できます。

|

||||

|

||||

|

||||

</details>

|

||||

<!-- Temp files refresh -->

|

||||

<details>

|

||||

<summary>TACの一時作成ファイルのリフレッシュ</summary>

|

||||

|

||||

これは "フェイク"設定で、実際には何も設定しません。むしろ、開発者がwebuiのオプションに追加できる更新ボタンを悪用するための小さなハックです。この設定の隣にある更新ボタンをクリックすると、タグオートコンプリートにいくつかの一時的な内部ファイルを再作成・再読み込みさせます。

|

||||

|

||||

タグオートコンプリートは様々な機能、特に余分なネットワークとワイルドカード補完に関連するこれらのファイルに依存しています。この設定は、例えば新しいLoRAをいくつかフォルダに追加し、タグ・オートコンプリートにリストを表示させるためにUIを再起動したくない場合に、リストを再構築するために使用できます。

|

||||

|

||||

また、この設定をクイック設定バーに追加することで、いつでも更新ボタンを利用できるようになります。

|

||||

|

||||

|

||||

</details>

|

||||

<br/>

|

||||

|

||||

# 翻訳

|

||||

タグとエイリアスの両方を翻訳するために使用することができ、また翻訳による検索を可能にするための、追加のファイルを翻訳セクションに追加することができます。

|

||||

このファイルは、`<英語のタグ/エイリアス>,<翻訳>`という形式のCSVである必要がありますが、3列のフォーマットを使用する古いファイルとの後方互換性のために、`oldFormat`をオンにすると代わりにそれを使うことができます。

|

||||

このファイルは、`<English tag/alias>,<Translation>`という形式のCSVである必要がありますが、3列のフォーマットを使用する古いファイルとの後方互換性のために、`oldFormat`をオンにすると、代わりに新しい2列の翻訳形式ではなく、古い3列の翻訳形式を使用するようになります。

|

||||

その場合、2番目のカラムは使用されず、パース時にスキップされます。

|

||||

|

||||

中国語の翻訳例:

|

||||

|

||||

|

||||

|

||||

|

||||

#### Extra file

|

||||

## 翻訳リスト

|

||||

- [🇨🇳 中国語訳](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/discussions/23) by @HalfMAI, 最も一般的なタグを機械翻訳と手作業で修正(レガシーフォーマットを使用)

|

||||

- [🇨🇳 中国語訳](https://github.com/sgmklp/tag-for-autocompletion-with-translation) by @sgmklp, [こちら](https://github.com/zcyzcy88/TagTable)をベースにして、より小さくした手動での翻訳セット。

|

||||

- [🇯🇵 日本語訳](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/discussions/265) by @applemango, 機械翻訳と人力翻訳の両方が利用可能。

|

||||

|

||||

> ### 🫵 あなたの助けが必要です!

|

||||

> 翻訳はコミュニティの努力により支えられています。もしあなたがタグファイルを翻訳したことがある場合、または作成したい場合は、あなたの成果をここに追加できるように、Pull RequestまたはIssueを開いてください。

|

||||

> 機械翻訳は、最も一般的なタグであっても、多くのことを間違えてしまいます。

|

||||

|

||||

## ライブ・プレビュー

|

||||

> ⚠️ 警告:

|

||||

>

|

||||

> この機能はまだ実験的なもので、使用中にバグに遭遇するかもしれません。

|

||||

|

||||

この機能はプロンプト内のすべての検出されたタグのライブプレビューを表示します。検出されたタグは、カンマで正しく区切られたものと長い文章の中にあるものの両方が表示されます。自然な文章では3単語まで検出することができ、1つのタグよりも複数単語の完全な一致を優先します。

|

||||

|

||||

検出されたタグの上には翻訳ファイルからの訳文が表示されるので、英語のタグの意味がよく分からない場合でも、プロンプトにタグが挿入された後でも(完了時のポップアップではなく)簡単に見つけることができます。

|

||||

|

||||

このオプションはデフォルトではオフになっていますが、翻訳ファイルを選択し、「Show live tag translation below prompt」をチェックすることで有効にすることができます。

|

||||

オフでも通常の機能には影響しません。

|

||||

|

||||

中国語翻訳時の例:

|

||||

|

||||

|

||||

|

||||

検出されたタグをクリックすると、そのタグがプロンプトで選択され、素早く編集できます。

|

||||

|

||||

|

||||

|

||||

#### ⚠️ ライブ翻訳に関する確認されている問題:

|

||||

ユーザーがテキストを入力または貼り付けると翻訳が更新されますが、プログラムによる操作(スタイルの適用やPNG Info / Image Browserからの読み込みなど)では更新されません。これは、プログラムによる編集の後に手動で何かを入力することで回避できます。

|

||||

|

||||

# Extra file

|

||||

エクストラファイルは、メインセットに含まれない新しいタグやカスタムタグを追加するために使用されます。

|

||||

[CSV tag data](#csv-tag-data)にある通常のタグのフォーマットと同じですが、ひとつだけ例外があります:

|

||||

カスタムタグにはカウントがないため、3列目(0から数える場合は2列目)はタグの横に表示される灰色のメタテキストに使用されます。

|

||||

@@ -213,7 +453,7 @@ Booruのサイトのように、タグは1つまたは複数のエイリアス

|

||||

|

||||

カスタムタグを通常のタグの前に追加するか、後に追加するかは、設定で選択することができます。

|

||||

|

||||

## CSV tag data

|

||||

# CSV tag data

|

||||

このスクリプトは、以下の方法で保存されたタグ付きCSVファイルを想定しています:

|

||||

```csv

|

||||

<name>,<type>,<postCount>,"<aliases>"

|

||||

@@ -252,3 +492,32 @@ commentary_request,5,2610959,

|

||||

|8 | Lore |

|

||||

|

||||

タグの種類は、結果の一覧のエントリーの色付けに使用されます。

|

||||

|

||||

## ⚠️ よくある問題、また現在確認されている問題:

|

||||

- お使いのブラウザの設定によっては、古いバージョンのスクリプトがキャッシュされることがあります。例えば、アップデート後に新機能が表示されない場合は、キャッシュを使わずにサイトを強制的にリロードするために、

|

||||

<kbd>CTRL</kbd> + <kbd>F5</kbd>

|

||||

を試してください。

|

||||

- プロンプトのポップアップが壊れたスタイルで表示されるか、全く表示されない場合([このような場合](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/assets/34448969/7bbfdd54-fc23-4bfc-85af-24704b139b3a))、openpose-editor 拡張機能がインストールされている場合は更新してください。古いバージョンでは他の拡張機能との間で問題が生じることが知られています。

|

||||

|

||||

<!-- Variable declarations for shorter main text -->

|

||||

[release-shield]: https://img.shields.io/github/v/release/DominikDoom/a1111-sd-webui-tagcomplete?logo=github&style=

|

||||

[release-url]: https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/releases

|

||||

|

||||

[contributors-shield]: https://img.shields.io/github/contributors/DominikDoom/a1111-sd-webui-tagcomplete

|

||||

[contributors-url]: https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/graphs/contributors

|

||||

|

||||

[forks-shield]: https://img.shields.io/github/forks/DominikDoom/a1111-sd-webui-tagcomplete

|

||||

[forks-url]: https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/network/members

|

||||

|

||||

[stargazers-shield]: https://img.shields.io/github/stars/DominikDoom/a1111-sd-webui-tagcomplete

|

||||

[stargazers-url]: https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/stargazers

|

||||

|

||||

[issues-shield]: https://img.shields.io/github/issues/DominikDoom/a1111-sd-webui-tagcomplete

|

||||

[issues-url]: https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/issues/new/choose

|

||||

|

||||

<!-- Links for feature section -->

|

||||

[image-browser-url]: https://github.com/AlUlkesh/stable-diffusion-webui-images-browser

|

||||

[multidiffusion-url]: https://github.com/pkuliyi2015/multidiffusion-upscaler-for-automatic1111

|

||||

[tag-editor-url]: https://github.com/toshiaki1729/stable-diffusion-webui-dataset-tag-editor

|

||||

[wd-tagger-url]: https://github.com/toriato/stable-diffusion-webui-wd14-tagger

|

||||

[umi-url]: https://github.com/Klokinator/Umi-AI

|

||||

|

||||

@@ -13,6 +13,12 @@

|

||||

你可以按照[以下方法](#installation)下载或拷贝文件,也可以使用[Releases](https://github.com/DominikDoom/a1111-sd-webui-tagcomplete/releases)中打包好的文件。

|

||||

|

||||

## 常见问题 & 已知缺陷:

|

||||

- 很多中国用户都报告过此扩展名和其他扩展名的 JavaScript 文件被阻止的问题。

|

||||

常见的罪魁祸首是 IDM / Internet Download Manager 浏览器插件,它似乎出于安全目的阻止了本地文件请求。

|

||||

如果您安装了 IDM,请确保在使用 webui 时禁用以下插件:

|

||||

|

||||

|

||||

|

||||

- 当`replaceUnderscores`选项开启时, 脚本只会替换Tag的一部分如果Tag包含多个单词,比如将`atago (azur lane)`修改`atago`为`taihou`并使用自动补全时.会得到 `taihou (azur lane), lane)`的结果, 因为脚本没有把后面的部分认为成同一个Tag。

|

||||

|

||||

## 演示与截图

|

||||

|

||||

@@ -1,6 +1,8 @@

|

||||

// Core components

|

||||

var TAC_CFG = null;

|

||||

var tagBasePath = "";

|

||||

var modelKeywordPath = "";

|

||||

var tacSelfTrigger = false;

|

||||

|

||||

// Tag completion data loaded from files

|

||||

var allTags = [];

|

||||

@@ -10,11 +12,14 @@ var extras = [];

|

||||

var wildcardFiles = [];

|

||||

var wildcardExtFiles = [];

|

||||

var yamlWildcards = [];

|

||||

var umiWildcards = [];

|

||||

var embeddings = [];

|

||||

var hypernetworks = [];

|

||||

var loras = [];

|

||||

var lycos = [];

|

||||

var modelKeywordDict = new Map();

|

||||

var chants = [];

|

||||

var styleNames = [];

|

||||

|

||||

// Selected model info for black/whitelisting

|

||||

var currentModelHash = "";

|

||||

@@ -33,6 +38,13 @@ let hideBlocked = false;

|

||||

// Tag selection for keyboard navigation

|

||||

var selectedTag = null;

|

||||

var oldSelectedTag = null;

|

||||

var resultCountBeforeNormalTags = 0;

|

||||

|

||||

// Lora keyword undo/redo history

|

||||

var textBeforeKeywordInsertion = "";

|

||||

var textAfterKeywordInsertion = "";

|

||||

var lastEditWasKeywordInsertion = false;

|

||||

var keywordInsertionUndone = false;

|

||||

|

||||

// UMI

|

||||

var umiPreviousTags = [];

|

||||

|

||||

@@ -8,10 +8,12 @@ const ResultType = Object.freeze({

|

||||

"wildcardTag": 4,

|

||||

"wildcardFile": 5,

|

||||

"yamlWildcard": 6,

|

||||

"hypernetwork": 7,

|

||||

"lora": 8,

|

||||

"lyco": 9,

|

||||

"chant": 10

|

||||

"umiWildcard": 7,

|

||||

"hypernetwork": 8,

|

||||

"lora": 9,

|

||||

"lyco": 10,

|

||||

"chant": 11,

|

||||

"styleName": 12

|

||||

});

|

||||

|

||||

// Class to hold result data and annotations to make it clearer to use

|

||||

@@ -22,9 +24,12 @@ class AutocompleteResult {

|

||||

|

||||

// Additional info, only used in some cases

|

||||

category = null;

|

||||

count = null;

|

||||

count = Number.MAX_SAFE_INTEGER;

|

||||

usageBias = null;

|

||||

aliases = null;

|

||||

meta = null;

|

||||

hash = null;

|

||||

sortKey = null;

|

||||

|

||||

// Constructor

|

||||

constructor(text, type) {

|

||||

|

||||

@@ -9,7 +9,11 @@ const core = [

|

||||

"#img2img_prompt > label > textarea",

|

||||

"#txt2img_neg_prompt > label > textarea",

|

||||

"#img2img_neg_prompt > label > textarea",

|

||||

".prompt > label > textarea"

|

||||

".prompt > label > textarea",

|

||||

"#txt2img_edit_style_prompt > label > textarea",

|

||||

"#txt2img_edit_style_neg_prompt > label > textarea",

|

||||

"#img2img_edit_style_prompt > label > textarea",

|

||||

"#img2img_edit_style_neg_prompt > label > textarea"

|

||||

];

|

||||

|

||||

// Third party text area selectors

|

||||

@@ -57,6 +61,31 @@ const thirdParty = {

|

||||

"[id^=MD-i2i][id$=prompt] textarea",

|

||||

"[id^=MD-i2i][id$=prompt] input[type='text']"

|

||||

]

|

||||

},

|

||||

"adetailer-t2i": {

|

||||

"base": "#txt2img_script_container",

|

||||

"hasIds": true,

|

||||

"onDemand": true,

|

||||

"selectors": [

|

||||

"[id^=script_txt2img_adetailer_ad_prompt] textarea",

|

||||

"[id^=script_txt2img_adetailer_ad_negative_prompt] textarea"

|

||||

]

|

||||

},

|

||||

"adetailer-i2i": {

|

||||

"base": "#img2img_script_container",

|

||||

"hasIds": true,

|

||||

"onDemand": true,

|

||||

"selectors": [

|

||||

"[id^=script_img2img_adetailer_ad_prompt] textarea",

|

||||

"[id^=script_img2img_adetailer_ad_negative_prompt] textarea"

|

||||

]

|

||||

},

|

||||

"deepdanbooru-object-recognition": {

|

||||

"base": "#tab_deepdanboru_object_recg_tab",

|

||||

"hasIds": false,

|

||||

"selectors": [

|

||||

"Found tags",

|

||||

]

|

||||

}

|

||||

}

|

||||

|

||||

@@ -90,7 +119,7 @@ function addOnDemandObservers(setupFunction) {

|

||||

|

||||

let base = gradioApp().querySelector(entry.base);

|

||||

if (!base) continue;

|

||||

|

||||

|

||||

let accordions = [...base?.querySelectorAll(".gradio-accordion")];

|

||||

if (!accordions) continue;

|

||||

|

||||

@@ -115,12 +144,12 @@ function addOnDemandObservers(setupFunction) {

|

||||

[...gradioApp().querySelectorAll(entry.selectors.join(", "))].forEach(x => setupFunction(x));

|

||||

} else { // Otherwise, we have to find the text areas by their adjacent labels

|

||||

let base = gradioApp().querySelector(entry.base);

|

||||

|

||||

|

||||

// Safety check

|

||||

if (!base) continue;

|

||||

|

||||

|

||||

let allTextAreas = [...base.querySelectorAll("textarea, input[type='text']")];

|

||||

|

||||

|

||||

// Filter the text areas where the adjacent label matches one of the selectors

|

||||

let matchingTextAreas = allTextAreas.filter(ta => [...ta.parentElement.childNodes].some(x => entry.selectors.includes(x.innerText)));

|

||||

matchingTextAreas.forEach(x => setupFunction(x));

|

||||

@@ -165,4 +194,4 @@ function getTextAreaIdentifier(textArea) {

|

||||

break;

|

||||

}

|

||||

return modifier;

|

||||

}

|

||||

}

|

||||

|

||||

@@ -1,13 +1,14 @@

|

||||

// Utility functions for tag autocomplete

|

||||

|

||||

// Parse the CSV file into a 2D array. Doesn't use regex, so it is very lightweight.

|

||||

// We are ignoring newlines in quote fields since we expect one-line entries and parsing would break for unclosed quotes otherwise

|

||||

function parseCSV(str) {

|

||||

var arr = [];

|

||||

var quote = false; // 'true' means we're inside a quoted field

|

||||

const arr = [];

|

||||

let quote = false; // 'true' means we're inside a quoted field

|

||||

|

||||

// Iterate over each character, keep track of current row and column (of the returned array)

|

||||

for (var row = 0, col = 0, c = 0; c < str.length; c++) {

|

||||

var cc = str[c], nc = str[c + 1]; // Current character, next character

|

||||

for (let row = 0, col = 0, c = 0; c < str.length; c++) {

|

||||

let cc = str[c], nc = str[c+1]; // Current character, next character

|

||||

arr[row] = arr[row] || []; // Create a new row if necessary

|

||||

arr[row][col] = arr[row][col] || ''; // Create a new column (start with empty string) if necessary

|

||||

|

||||

@@ -22,14 +23,12 @@ function parseCSV(str) {

|

||||

// If it's a comma and we're not in a quoted field, move on to the next column

|

||||

if (cc == ',' && !quote) { ++col; continue; }

|

||||

|

||||

// If it's a newline (CRLF) and we're not in a quoted field, skip the next character

|

||||

// and move on to the next row and move to column 0 of that new row

|

||||

if (cc == '\r' && nc == '\n' && !quote) { ++row; col = 0; ++c; continue; }

|

||||

// If it's a newline (CRLF), skip the next character and move on to the next row and move to column 0 of that new row

|

||||

if (cc == '\r' && nc == '\n') { ++row; col = 0; ++c; quote = false; continue; }

|

||||

|

||||

// If it's a newline (LF or CR) and we're not in a quoted field,

|

||||

// move on to the next row and move to column 0 of that new row

|

||||

if (cc == '\n' && !quote) { ++row; col = 0; continue; }

|

||||

if (cc == '\r' && !quote) { ++row; col = 0; continue; }

|

||||

// If it's a newline (LF or CR) move on to the next row and move to column 0 of that new row

|

||||

if (cc == '\n') { ++row; col = 0; quote = false; continue; }

|

||||

if (cc == '\r') { ++row; col = 0; quote = false; continue; }

|

||||

|

||||

// Otherwise, append the current character to the current column

|

||||

arr[row][col] += cc;

|

||||

@@ -41,7 +40,7 @@ function parseCSV(str) {

|

||||

async function readFile(filePath, json = false, cache = false) {

|

||||

if (!cache)

|

||||

filePath += `?${new Date().getTime()}`;

|

||||

|

||||

|

||||

let response = await fetch(`file=${filePath}`);

|

||||

|

||||

if (response.status != 200) {

|

||||

@@ -61,6 +60,92 @@ async function loadCSV(path) {

|

||||

return parseCSV(text);

|

||||

}

|

||||

|

||||

// Fetch API

|

||||

async function fetchAPI(url, json = true, cache = false) {

|

||||

if (!cache) {

|

||||

const appendChar = url.includes("?") ? "&" : "?";

|

||||

url += `${appendChar}${new Date().getTime()}`

|

||||

}

|

||||

|

||||

let response = await fetch(url);

|

||||

|

||||

if (response.status != 200) {

|

||||

console.error(`Error fetching API endpoint "${url}": ` + response.status, response.statusText);

|

||||

return null;

|

||||

}

|

||||

|

||||

if (json)

|

||||

return await response.json();

|

||||

else

|

||||

return await response.text();

|

||||

}

|

||||

|

||||

async function postAPI(url, body = null) {

|

||||

let response = await fetch(url, {

|

||||

method: "POST",

|

||||

headers: {'Content-Type': 'application/json'},

|

||||

body: body

|

||||

});

|

||||

|

||||

if (response.status != 200) {

|

||||

console.error(`Error posting to API endpoint "${url}": ` + response.status, response.statusText);

|

||||

return null;

|

||||

}

|

||||

|

||||

return await response.json();

|

||||

}

|

||||

|

||||

async function putAPI(url, body = null) {

|

||||

let response = await fetch(url, { method: "PUT", body: body });

|

||||

|

||||

if (response.status != 200) {

|

||||

console.error(`Error putting to API endpoint "${url}": ` + response.status, response.statusText);

|

||||

return null;

|

||||

}

|

||||

|

||||

return await response.json();

|

||||

}

|

||||

|

||||

// Extra network preview thumbnails

|

||||

async function getExtraNetworkPreviewURL(filename, type) {

|

||||

const previewJSON = await fetchAPI(`tacapi/v1/thumb-preview/${filename}?type=${type}`, true, true);

|

||||

if (previewJSON?.url) {

|

||||

const properURL = `sd_extra_networks/thumb?filename=${previewJSON.url}`;

|

||||

if ((await fetch(properURL)).status == 200) {

|

||||

return properURL;

|

||||

} else {

|

||||

// create blob url

|

||||

const blob = await (await fetch(`tacapi/v1/thumb-preview-blob/${filename}?type=${type}`)).blob();

|

||||

return URL.createObjectURL(blob);

|

||||

}

|

||||

} else {

|

||||

return null;

|

||||

}

|

||||

}

|

||||

|

||||

lastStyleRefresh = 0;

|

||||

// Refresh style file if needed

|

||||

async function refreshStyleNamesIfChanged() {

|

||||

// Only refresh once per second

|

||||

currentTimestamp = new Date().getTime();

|

||||

if (currentTimestamp - lastStyleRefresh < 1000) return;

|

||||

lastStyleRefresh = currentTimestamp;

|

||||

|

||||

const response = await fetch(`tacapi/v1/refresh-styles-if-changed?${new Date().getTime()}`)

|

||||

if (response.status === 304) {

|

||||

// Not modified

|

||||

} else if (response.status === 200) {

|

||||

// Reload

|

||||

QUEUE_FILE_LOAD.forEach(async fn => {

|

||||

if (fn.toString().includes("styleNames"))

|

||||

await fn.call(null, true);

|

||||

})

|

||||

} else {

|

||||

// Error

|

||||

console.error(`Error refreshing styles.txt: ` + response.status, response.statusText);

|

||||

}

|

||||

}

|

||||

|

||||

// Debounce function to prevent spamming the autocomplete function

|

||||

var dbTimeOut;

|

||||

const debounce = (func, wait = 300) => {

|

||||

@@ -89,6 +174,126 @@ function difference(a, b) {

|

||||

)].reduce((acc, [v, count]) => acc.concat(Array(Math.abs(count)).fill(v)), []);

|

||||

}

|

||||

|

||||

// Object flatten function adapted from https://stackoverflow.com/a/61602592

|

||||

// $roots keeps previous parent properties as they will be added as a prefix for each prop.

|

||||

// $sep is just a preference if you want to seperate nested paths other than dot.

|

||||

function flatten(obj, roots = [], sep = ".") {

|

||||

return Object.keys(obj).reduce(

|

||||

(memo, prop) =>

|

||||

Object.assign(

|

||||

// create a new object

|

||||

{},

|

||||

// include previously returned object

|

||||

memo,

|

||||

Object.prototype.toString.call(obj[prop]) === "[object Object]"

|

||||

? // keep working if value is an object

|

||||

flatten(obj[prop], roots.concat([prop]), sep)

|

||||

: // include current prop and value and prefix prop with the roots

|

||||

{ [roots.concat([prop]).join(sep)]: obj[prop] }

|

||||

),

|

||||

{}

|

||||

);

|

||||

}

|

||||

|

||||

// Calculate biased tag score based on post count and frequent usage

|

||||

function calculateUsageBias(result, count, uses) {

|

||||

// Check setting conditions

|

||||

if (uses < TAC_CFG.frequencyMinCount) {

|

||||

uses = 0;

|

||||

} else if (uses != 0) {

|

||||

result.usageBias = true;

|

||||

}

|

||||

|

||||

switch (TAC_CFG.frequencyFunction) {

|

||||

case "Logarithmic (weak)":

|

||||

return Math.log(1 + count) + Math.log(1 + uses);

|

||||

case "Logarithmic (strong)":

|

||||

return Math.log(1 + count) + 2 * Math.log(1 + uses);

|

||||

case "Usage first":

|

||||

return uses;

|

||||

default:

|

||||

return count;

|

||||

}

|

||||

}

|

||||

// Beautify return type for easier parsing

|

||||

function mapUseCountArray(useCounts, posAndNeg = false) {

|

||||

return useCounts.map(useCount => {

|

||||

if (posAndNeg) {

|

||||

return {

|

||||

"name": useCount[0],

|

||||

"type": useCount[1],

|

||||

"count": useCount[2],

|

||||

"negCount": useCount[3],

|

||||

"lastUseDate": useCount[4]

|

||||

}

|

||||

}

|

||||

return {

|

||||

"name": useCount[0],

|

||||

"type": useCount[1],

|

||||

"count": useCount[2],

|

||||

"lastUseDate": useCount[3]

|

||||

}

|

||||

});

|

||||

}

|

||||

// Call API endpoint to increase bias of tag in the database

|

||||

function increaseUseCount(tagName, type, negative = false) {

|

||||

postAPI(`tacapi/v1/increase-use-count?tagname=${tagName}&ttype=${type}&neg=${negative}`);

|

||||

}

|

||||

// Get use count of tag from the database

|

||||

async function getUseCount(tagName, type, negative = false) {

|

||||

return (await fetchAPI(`tacapi/v1/get-use-count?tagname=${tagName}&ttype=${type}&neg=${negative}`, true, false))["result"];

|

||||

}

|

||||

async function getUseCounts(tagNames, types, negative = false) {

|

||||

// While semantically weird, we have to use POST here for the body, as urls are limited in length

|

||||

const body = JSON.stringify({"tagNames": tagNames, "tagTypes": types, "neg": negative});

|

||||

const rawArray = (await postAPI(`tacapi/v1/get-use-count-list`, body))["result"]

|

||||

return mapUseCountArray(rawArray);

|

||||

}

|

||||

async function getAllUseCounts() {

|

||||

const rawArray = (await fetchAPI(`tacapi/v1/get-all-use-counts`))["result"];

|

||||

return mapUseCountArray(rawArray, true);

|

||||

}

|

||||

async function resetUseCount(tagName, type, resetPosCount, resetNegCount) {

|

||||

await putAPI(`tacapi/v1/reset-use-count?tagname=${tagName}&ttype=${type}&pos=${resetPosCount}&neg=${resetNegCount}`);

|

||||

}

|

||||

|

||||

function createTagUsageTable(tagCounts) {

|

||||

// Create table

|

||||

let tagTable = document.createElement("table");

|

||||

tagTable.innerHTML =

|

||||

`<thead>

|

||||

<tr>

|

||||

<td>Name</td>

|

||||

<td>Type</td>

|

||||

<td>Count(+)</td>

|

||||

<td>Count(-)</td>

|

||||

<td>Last used</td>

|

||||

</tr>

|

||||

</thead>`;

|

||||

tagTable.id = "tac_tagUsageTable"

|

||||

|

||||

tagCounts.forEach(t => {

|

||||

let tr = document.createElement("tr");

|

||||

|

||||

// Fill values

|

||||

let values = [t.name, t.type-1, t.count, t.negCount, t.lastUseDate]

|

||||

values.forEach(v => {

|

||||

let td = document.createElement("td");

|

||||

td.innerText = v;

|

||||

tr.append(td);

|

||||

});

|

||||

// Add delete/reset button

|

||||

let delButton = document.createElement("button");

|

||||

delButton.innerText = "🗑️";

|

||||

delButton.title = "Reset count";

|

||||

tr.append(delButton);

|

||||

|

||||

tagTable.append(tr)

|

||||

});

|

||||

|

||||

return tagTable;

|

||||

}

|

||||

|

||||

// Sliding window function to get possible combination groups of an array

|

||||

function toNgrams(inputArray, size) {

|

||||

return Array.from(

|

||||

@@ -97,7 +302,11 @@ function toNgrams(inputArray, size) {

|

||||

);

|

||||

}

|

||||

|

||||

function escapeRegExp(string) {

|

||||

function escapeRegExp(string, wildcardMatching = false) {

|

||||

if (wildcardMatching) {

|

||||

// Escape all characters except asterisks and ?, which should be treated separately as placeholders.

|

||||

return string.replace(/[-[\]{}()+.,\\^$|#\s]/g, '\\$&').replace(/\*/g, '.*').replace(/\?/g, '.');

|

||||

}

|

||||

return string.replace(/[.*+?^${}()|[\]\\]/g, '\\$&'); // $& means the whole matched string

|

||||

}

|

||||

function escapeHTML(unsafeText) {

|

||||

@@ -141,6 +350,49 @@ function observeElement(element, property, callback, delay = 0) {

|

||||

}

|

||||

}

|

||||

|

||||

// Sort functions

|

||||

function getSortFunction() {

|

||||

let criterion = TAC_CFG.modelSortOrder || "Name";

|

||||

|

||||

const textSort = (a, b, reverse = false) => {

|

||||

// Assign keys so next sort is faster